Tech Topic | December 2015 Hearing Review

Research suggests that cognition, hearing, and other sensory experiences are intertwined. To achieve the best hearing support for individuals with hearing loss, hearing instrument signal processing should support the brain’s natural functions. This paper describes two aspects of hearing instrument signal processing that are important for providing the most advantageous auditory input to the individual, when higher processes and cognition are considered. Examples of how ReSound is meeting these needs with current technology are also provided.

Research into cognition and its role in hearing is abundant across several scientific fields. We are gaining a deeper understanding of the auditory pathway, far beyond the level of the cochlea, and arriving at new and interesting discoveries about the role the auditory system plays in how we experience the world around us.

For example, researchers have asserted that the visual cortex of the brain integrates not only visual inputs from the eyes, but auditory inputs as well.1 Through functional MRI, brain activity was viewed while blindfolded participants listened to birds, traffic noise, and crowd sounds. Simply by listening to these sounds without visual input, activity was observed in the early visual cortex. The researchers asserted that “sounds create visual imagery, mental images and automatic projections” that help people understand and make sense of their surroundings.

The multisensory connections continue with another study in which participants reported seafood tasted better while listening to ocean sounds, even if no actual ocean was nearby.2 Together, these studies linking vision and taste with auditory input indicate that multiple stimuli to the brain can impact a sensory experience.

A study in Neuron noted that the precise rhythm of electrical impulses from the inner hair cells of mice early in life aids in the development of tonotopic organization in the auditory pathway.3 This is especially interesting because this development occurs before the mice are able to hear external sounds. The implications of this research point to higher auditory system involvement and processing development prior to any actual hearing input.

It is widely accepted that the auditory pathway from the cochlea to the brain is a complex and highly sophisticated system. Research has also shown that the sounds we hear can impact other sensory experiences as well. With this in mind, modern hearing instruments are reaching a higher level of sophistication to provide the individual with more usable and true information with which to understand the auditory scene.

This paper will describe two aspects of hearing instrument signal processing that can provide the most advantageous hearing input to the individual, along with examples of the technology available through ReSound to meet these needs.

Cognition and Directionality

Directionality in hearing instruments is widely regarded as a way to improve the signal-to-noise ratio (SNR) in noisy listening environments. Many current directional technologies aim to amplify the loudest speech signal, whether it originates from the front, back, or sides, more than other signals surrounding the hearing instrument user. While this paradigm can succeed in making the loudest speaker more audible in these difficult listening situations, it does not account for the cognitive or higher level processes occurring in the brain.

Auditory scene analysis describes the way in which the brain organizes acoustic inputs from each ear to provide a mental model of the listening environment.4 To paint a realistic and usable soundscape, the brain detects new sounds as they occur, and then chooses whether they are salient. For example, in the detection mode, a new sound in the environment may catch a listener’s attention. This could be someone whispering behind you in a theater. Next, the brain must choose whether to continue paying attention to the novel sound. This is a higher level process, by which the sound is analyzed for its importance. In the example of the novel whisper in the theater, this sound would be most likely ignored. However, if the whisper included the listener’s name, the listener would probably choose to pay attention to the sound. In both cases, the brain is choosing what is most important at the time. It is a transparent and quick process, but one that is beneficial in making sense of the sound environment while alerting to interesting or threatening signals.

With auditory scene analysis in mind, certain problems arise for directionality. First, processing that focuses on the loudest speech signal in the environment makes an assumption that this sound is the most important to the listener. While this may be true in some cases, it is not in others. Second, it impairs the natural detect-and-choose processes in the brain by reducing audibility for sounds other than the loudest speaker. This effectively reduces the listener’s ability to monitor the listening situation, or to pick up on new voices or signals that may not be the loudest, but may still be important to hear.

In addition, hearing instrument users in directional mode may feel more “cut off” from the listening environment, as if they are listening “in a tunnel.” A complaint with conventional forward-facing directionality may be “I can hear the person across from me at a restaurant, but I can’t hear the people to my sides.”

The challenge in helping hearing instrument users hear well in difficult, noisy environments extends beyond simply improving the SNR for the dominant speech signal. It also includes maintaining auditory awareness of other, less dominant sound inputs, be it a chair sliding behind the listener or a softer voice that the listener would prefer to hear more than the loudest person in the room. It includes being able to participate in a lively conversation all around a table in a crowded restaurant, not just with the person in front of the listener. In other words, the real challenge is how hearing instrument directionality can be used to support auditory scene analysis in the most natural way.

Enhanced binaural directional strategies. When designing a directionality algorithm to support natural auditory scene analysis, it is imperative to look not only at performance within the sound booth, but also at user performance and preference during wear-time. For example, a directional beamformer response may produce astounding SNR improvements when the speech is spatially separated from the noise in a sound-treated clinic booth; however, in the real world, this same response may have less impressive performance, or may cut the listener off from other sounds that may be important to hear.

ReSound wanted to provide listeners with the best of both scenarios, so that natural cognitive processes in auditory scene analysis could occur. ReSound Binaural Directionality (and Binaural Directionality II) offers bilateral omnidirectional, bilateral directional, and asymmetric directional responses to either side, based on analysis of the sound environment. Each of these microphone modes is supported by research in particular listening environments. Listeners prefer bilateral omnidirectionality in quiet.5,6 Bilateral directionality provides the greatest benefit in noise when facing the speaker.7 Asymmetric directionality improves ease of listening,8 while not significantly reducing directional benefit.8-10 Further, asymmetric directionality can provide the best intelligibility when speech is to one side of the listener, via an omnidirectional response on the speech side and a directional response on the noisy side. This places the directional null where there is the largest concentration of noise.11-13 The wireless exchange of environmental acoustic information via 2.4 GHz enables the hearing instruments to automatically select the most advantageous directionality configuration.
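The mode preferences cited above can be summarized as a small decision table. The sketch below is only an illustration assembled from those cited findings; it is not ReSound's algorithm. The function name, the `noisy` flag, the `speech_side` labels, and the fallback used when no speech location is clear are all assumptions made for this example.

```python
from enum import Enum
from typing import Tuple


class Mode(Enum):
    OMNI = "omnidirectional"
    DIRECTIONAL = "directional"


def select_modes(noisy: bool, speech_side: str) -> Tuple[Mode, Mode]:
    """Return (left_mode, right_mode) for a hypothetical scene classification.

    noisy:       True when the environment is classified as noisy (assumed input).
    speech_side: "front", "left", "right", or "none" (assumed labels).
    """
    if not noisy:
        # Quiet: listeners prefer a bilateral omnidirectional response.
        return (Mode.OMNI, Mode.OMNI)
    if speech_side == "front":
        # Noise with the talker in front: bilateral directionality.
        return (Mode.DIRECTIONAL, Mode.DIRECTIONAL)
    if speech_side == "left":
        # Omni on the speech side, directional on the noisy side.
        return (Mode.OMNI, Mode.DIRECTIONAL)
    if speech_side == "right":
        return (Mode.DIRECTIONAL, Mode.OMNI)
    # Assumption for this sketch: with no clear talker, keep full awareness.
    return (Mode.OMNI, Mode.OMNI)


left_mode, right_mode = select_modes(noisy=True, speech_side="left")
```

In this example the left instrument stays omnidirectional toward the talker while the right instrument becomes directional toward the noise.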

How does Binaural Directionality support cognitive processes, helping individuals detect and choose which signals are worthy of their focus? The key to this support is the availability of an omnidirectional response. This occurs in the bilateral omnidirectional configuration, as well as both asymmetric directional configurations, with omnidirectionality to either side. The omnidirectional response to either or both sides allows the listener to be fully aware of the soundscape in order to choose where to focus attention or turn their head. However, even a bilateral directional response in Binaural Directionality provides auditory awareness for low frequencies. This is due to the integrated bandsplit directionality in every ReSound directional mode, which provides an omnidirectional response for low frequencies and a directional response for high frequencies.
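The bandsplit idea can be pictured as a per-band choice between two responses. The following is a minimal sketch under stated assumptions: the 1000 Hz crossover, the 15 dB rear attenuation, and the band centers are hypothetical values chosen for illustration, not ReSound specifications.

```python
from typing import List, Sequence


def bandsplit_rear_attenuation(
    band_centers_hz: Sequence[float],
    directional_attenuation_db: float = 15.0,  # assumed value, illustration only
    crossover_hz: float = 1000.0,              # assumed crossover, illustration only
) -> List[float]:
    """Attenuation (dB) applied to a sound arriving from behind, per band.

    Bands below the crossover get an omnidirectional response (no attenuation);
    bands at or above it get the directional response.
    """
    attenuation = []
    for center in band_centers_hz:
        if center < crossover_hz:
            attenuation.append(0.0)
        else:
            attenuation.append(directional_attenuation_db)
    return attenuation


bands = [250.0, 500.0, 1000.0, 2000.0, 4000.0]
rear_attenuation = bandsplit_rear_attenuation(bands)
```

Low-frequency energy from behind passes unattenuated in this sketch, which is the property that keeps the listener aware of the surroundings even in a directional mode.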

The result is that hearing instrument users are able to function more normally in a complex, noisy environment, as they can choose which signal is most important, without the hearing instrument choosing for them. Instead of receiving only the limited input provided by a narrow directional beam to one angle, the user is more aware of the entire listening environment. The combination of environmental analysis and bandsplit directionality enables Binaural Directionality to promote better audibility for the surroundings, which allows natural auditory scene analysis to take place in the user’s brain.

Cognition and Localization

Localization is a component of spatial hearing, which is the accurate perception of auditory objects and their relationships with one another in the auditory space. Spatial hearing is optimized when multiple localization cues, provided to the brain, are in agreement: interaural time differences (ITD), interaural level differences (ILD), and monaural spectral cues.

ITDs provide cues related to the time of arrival of sounds at each ear, and are largely influential for the localization of low frequency signals. ILDs provide cues based on the intensity differences for a sound arriving at each ear, and play a greater role in localization for high frequencies. Monaural spectral cues are derived from the pinna effects at each ear. When a listener enjoys the congruence of all localization cues, spatial hearing is realized, and sound quality is better preserved.
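To give a sense of the size of the ITD cue, the sketch below uses Woodworth's classic spherical-head approximation, ITD = (r/c)(θ + sin θ). This model is not part of this paper; it is brought in only for illustration, and the head radius and speed of sound are assumed typical values.

```python
import math

HEAD_RADIUS_M = 0.0875        # assumed average adult head radius
SPEED_OF_SOUND_M_S = 343.0    # assumed, air at about 20 degrees C


def woodworth_itd_seconds(azimuth_deg: float) -> float:
    """Approximate ITD for a distant source at 0..90 degrees from straight ahead."""
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth_deg must be between 0 and 90")
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


itd_front = woodworth_itd_seconds(0.0)
itd_side = woodworth_itd_seconds(90.0)
```

Under these assumptions, a source straight ahead produces no ITD, while a source directly to one side produces an ITD of roughly 0.66 ms.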

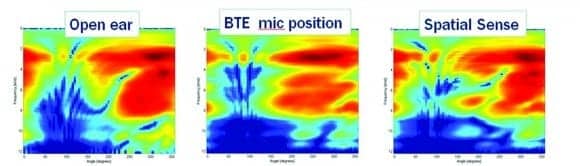

Figure 1. Attenuation of a multi-frequency signal rotated around the head and measured at the right ear. Red indicates the least amount of attenuation, and blue indicates the greatest amount of attenuation of the signal. Patterns of attenuation are the products of head-shadow and pinna effects.

Figure 1 plots attenuation patterns for a constant-intensity broadband signal rotated around the head. Measurement occurs at the coupler inside the right ear of a KEMAR manikin. The least amount of attenuation, depicted in red, occurs when the signal is closest to the measurement ear. The greatest attenuation occurs when the signal is farthest from the measurement ear, and is depicted in blue. Head-shadow effects are largely responsible for the attenuation at the left side of the head, and monaural pinna cue effects are evidenced in the pattern from the front to the back of the right side of the head. These intensity changes are predominant in the higher frequencies, which appear as the outer rings in the figure.

Figure 2 re-plots the polar graph of Figure 1 to an x-y graph, where the x-axis is the azimuth of signal rotation and the y-axis is frequency (inverse order). Differences in amplitude are again plotted by color. The left panel of Figure 2 shows the same data as Figure 1 for the unaided ear.

Figure 2. Attenuation of a multi-frequency signal rotating around the head for the open ear, BTE microphone position, and ReSound Spatial Sense. All measurements occur at the right ear.

Hearing instrument users with traditional signal processing sometimes describe all sounds as originating within their heads, or as if they are listening to sounds via basic headphones. This is a common perception when localization cues are distorted via unnatural microphone location and/or wide dynamic range compression (WDRC). Hearing instrument microphone location behind or above the pinna can distort the monaural spectral cues and ILDs the brain uses to localize sounds and promote a realistic representation of auditory objects in the listening environment. This distortion of the unaided ear’s natural spectral and ILD cues is apparent in the differences between the graphs in the center panel of Figure 2, with the BTE microphone location, and the left panel for the open ear.

ILDs can also be distorted by WDRC processing in hearing instruments. With WDRC processing, softer sounds are amplified more than louder sounds. When a sound originates from the right side of the head, the head shadow effect will cause the sound arriving at the left ear to be at a lower intensity level than at the right ear. As a result, more gain will be applied to the softer sound at the left ear than to the louder sound at the right ear. This means that the relative difference in level of the sound at each eardrum, the ILD, will be smaller than would occur with the open ear.
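The arithmetic behind this ILD reduction can be shown with a simple static compressor applied independently at each ear. This is a minimal sketch, not any manufacturer's gain rule; the 50 dB kneepoint, 2:1 ratio, 20 dB linear gain, and input levels are assumed values.

```python
def wdrc_gain_db(
    input_db: float,
    threshold_db: float = 50.0,   # assumed compression kneepoint
    ratio: float = 2.0,           # assumed compression ratio
    linear_gain_db: float = 20.0, # assumed gain below the kneepoint
) -> float:
    """Static WDRC gain: constant below the kneepoint, decreasing above it."""
    if input_db <= threshold_db:
        return linear_gain_db
    # Above the kneepoint, output rises only 1/ratio dB per input dB.
    return linear_gain_db - (input_db - threshold_db) * (1.0 - 1.0 / ratio)


def aided_ild_independent(near_ear_db: float, far_ear_db: float) -> float:
    """ILD after each ear is compressed on its own input level."""
    near_out = near_ear_db + wdrc_gain_db(near_ear_db)
    far_out = far_ear_db + wdrc_gain_db(far_ear_db)
    return near_out - far_out


open_ear_ild = 70.0 - 60.0                      # 10 dB head-shadow difference
aided_ild = aided_ild_independent(70.0, 60.0)   # far ear receives more gain
```

With these assumed settings, the far ear receives 15 dB of gain and the near ear 10 dB, so the 10 dB open-ear ILD shrinks to 5 dB.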

Restoring natural pinna cues with Spatial Sense. ReSound Spatial Sense is designed to restore the natural cues the brain needs to localize sounds in the environment. Spatial Sense combines a pinna restoration algorithm with binaural alignment of compression, for a more natural representation of sound locations in the auditory space. As shown in the far right panel of Figure 2, the pinna restoration provided by Spatial Sense yields a response more similar to the open ear spectral response (far left panel of Figure 2).

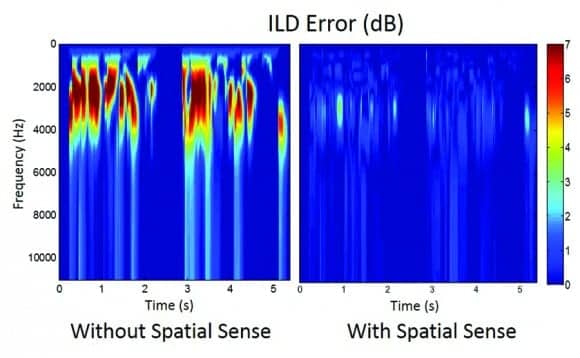

The binaural compression component of Spatial Sense ensures that the ILD cues for a sound originating closer to one ear than the other are maintained. This reduction of ILD error is shown in Figure 3 for a male speech signal. Areas in red indicate a larger dB ILD error; areas in blue signify minimal to no error.
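One generic way to keep the ILD intact is to have both instruments apply the same gain. The sketch below links the gain to the louder ear; this linking rule, the function names, and the example gain curve are assumptions for illustration and do not describe how Spatial Sense is implemented.

```python
from typing import Callable, Tuple


def linked_outputs(
    left_db: float,
    right_db: float,
    gain_fn: Callable[[float], float],
) -> Tuple[float, float]:
    """Apply one shared gain to both ears so their level difference is unchanged.

    Assumption for this sketch: the shared gain is driven by the louder ear.
    """
    shared_gain = gain_fn(max(left_db, right_db))
    return left_db + shared_gain, right_db + shared_gain


def example_gain(level_db: float) -> float:
    """Hypothetical compressive gain: 20 dB, reduced by 0.5 dB per dB above 50 dB."""
    return 20.0 - 0.5 * max(0.0, level_db - 50.0)


left_out, right_out = linked_outputs(60.0, 70.0, example_gain)
preserved_ild = right_out - left_out
```

Because both ears receive the same gain, the 10 dB difference at the input is still 10 dB at the output, whatever gain curve is supplied.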

Due to the restoration of monaural spectral cues and preservation of between-ear intensity differences, Spatial Sense can promote better sound quality and spatial hearing abilities. Test participants in a single-blinded crossover design rated “ease of listening” higher on the Speech, Spatial and Qualities of Hearing Scale (SSQ)14 with Spatial Sense than without it. In addition, tonal quality on a subjective rating questionnaire was rated higher when Spatial Sense was activated (J Groth, unpublished data, 2015).

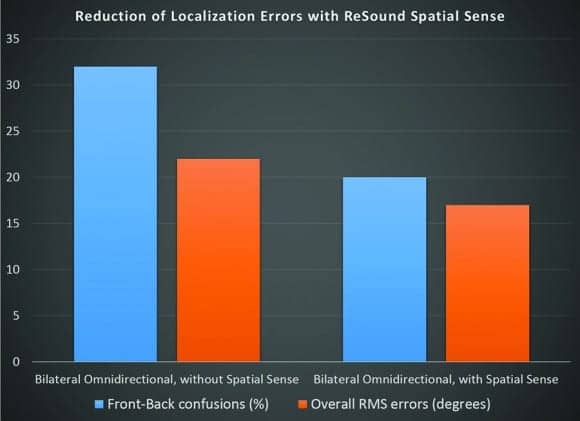

Figure 4. Reduction of localization errors with ReSound Spatial Sense, as compared to a standard bilateral omnidirectional response.

The localization improvements provided by Spatial Sense are evidenced by a reduction in the percentage of front-to-back confusions, and in fewer localization errors for sounds originating from different azimuths around the listener. Figure 4 shows these improvements, as compared to a traditional bilateral omnidirectional response.

Spatial Sense can be thought of as emulating the natural open ear response for device styles that have microphone placement above the pinna. Therefore, it is available as an alternative to an omnidirectional response for both ears in BTE and RIE device styles. It also replaces the bilateral omnidirectional condition as part of Binaural Directionality II.

Users spend around 70% of their time in quieter environments,15 and in these environments, omnidirectional responses are typically preferred by users.5,6 This preference is likely due to better sound quality and better audibility for the surrounding environment. It is for these reasons that Spatial Sense fits well into the Binaural Directionality strategy, which utilizes a bilateral omnidirectional response according to the demands of the listening environment. At time of writing, ReSound LiNX2 and ENZO2 feature Spatial Sense.

Summary

The brain integrates the input of many senses into a higher mental construct, to better understand and navigate the world around us. Hearing technology that supports the natural processes in the brain to make sense of the environment can provide improvements and benefits in sound quality, auditory awareness, and localization. Current hearing instrument technology, such as that provided by ReSound signal processing, demonstrates that hearing instruments are making strides in this direction.

References

1. Vetter P, Smit FW, Muckli L. Decoding sound and imagery content in early visual cortex. Current Biology. 2014;24(11):1256-1262.
2. Stuckey B. Taste: Surprising Stories and Science about Why Food Tastes Good. New York: Atria Books; 2013.
3. Clause A, Kim G, Sonntag M, Weisz CJC, Vetter DE, Rubsamen R, Kandler K. The precise temporal pattern of prehearing spontaneous activity is necessary for tonotopic map refinement. Neuron. 2014;82:822-835.
4. Bregman AS. Auditory Scene Analysis. Cambridge, Mass: MIT Press; 1990.
5. Walden B, Surr R, Cord M, Dyrlund O. Predicting hearing aid microphone preference in everyday listening. J Am Acad Audiol. 2004;15:365-396.
6. Walden B, Surr R, Cord M, Grant K, Summers V, Dittberner A. The robustness of hearing aid microphone preferences in everyday environments. J Am Acad Audiol. 2007;18:358-379.
7. Hornsby B. Effects of noise configuration and noise type on binaural benefit with asymmetric directional fittings. Paper presented at: 155th Meeting of the Acoustical Society of America; June 30-July 4, 2008; Paris, France.
8. Cord MT, Walden BE, Surr RK, Dittberner AB. Field evaluation of an asymmetric directional microphone fitting. J Am Acad Audiol. 2007;18:245-256.
9. Bentler RA, Egge JLM, Tubbs JL, Dittberner AB, Flamme GA. Quantification of directional benefit across different polar response patterns. J Am Acad Audiol. 2004;15:649-659.
10. Kim JS, Bryan MF. The effects of asymmetric directional microphone fittings on acceptance of background noise. Int J Audiol. 2011;50:290-296.
11. Hornsby B, Ricketts T. Effects of noise source configuration on directional benefit using symmetric and asymmetric directional hearing aid fittings. Ear Hear. 2007;28:177-186.
12. Coughlin M, Hallenbeck S, Whitmer W, Dittberner A, Bondy J. Directional benefit and signal-of-interest location. Paper presented at: American Academy of Audiology Convention; 2008; Charlotte, NC.
13. Cord MT, Surr RK, Walden BE, Dittberner AB. Ear asymmetries and asymmetric directional microphone hearing aid fittings. Am J Audiol. 2011;20:111-122.
14. Gatehouse S, Noble W. The Speech, Spatial and Qualities of Hearing Scale. Int J Audiol. 2004;43(2):85-99.
15. Walden B, Surr R, Cord M, Dyrlund O. Predicting hearing aid microphone preference in everyday listening. J Am Acad Audiol. 2004;15:365-396.

Tammara Stender, AuD, is manager at GN ReSound Global Audiology in Glenview, Ill.

John A. Nelson, PhD, is vice president of Audiology and Professional Services at ReSound North America in Bloomington, Minn.

Correspondence can be addressed to HR or Dr Stender at: [email protected]

Original citation for this article: Stender T, Nelson JA. Cognitive Research on Hearing: Toward Implementation in Hearing Instruments. Hearing Review. 2015;22(12):36.