Tech Topic | April 2018 Hearing Review

A look into the present and future of hearing aid processing and post-fitting adjustments for patients.

We are truly entering the machine-learning era. In tasks with well-defined boundaries, machine-learning systems query data and learn from it. Machine learning already appears in data security, financial trading, fraud detection, language processing, and healthcare, and more applications are predicted.1

While machine learning could revolutionize audiology, hearing care professionals (HCPs) will not become redundant, because any machine-learning approach depends on real-life data generated by human activity.

The digital revolution of the ’80s and ’90s spawned the modern digital hearing aid as we know it today, and digital processing has become the standard way of building a hearing aid with amazing capabilities. Modern digital signal processing has made noise reduction, beamforming, feedback cancellation, speech enhancement, and similar features standard in any hearing aid.

All of these new capabilities also introduced complexity. Modern hearing aids have become so complex that adjusting them precisely to meet individual needs can be challenging. Yet this is what HCPs aim to do at each fitting. And they are good at it!

Audiological machine-learning solutions that can query data and learn based on experience could help end-users and clinicians alike. Machine learning can potentially provide solutions to assist in-clinic hearing aid fitting and help end-users in situations where it is not always possible for their audiologist to be there to assist.

In this article, we will shed light on the machine-learning “black box,” define machine learning and explain how it works, and explore a possible system that relies on machine learning tailored for hearing aid personalization, a system that uses sophisticated statistical modeling techniques. We will also demonstrate the benefits such a system can offer and discuss possibilities for our profession from the end-user, HCP, and manufacturer perspectives.

What is Machine Learning?

Machine learning is a subject area in computer science in which systems or algorithms are designed to learn from collected data, often with the goal of predicting outcomes for unseen input data.
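To make this definition concrete, the sketch below learns from a handful of collected examples and predicts the label of an input it has never seen, using a 1-nearest-neighbor classifier, one of the simplest machine-learning methods. The features (overall sound level and estimated signal-to-noise ratio) and the labels are hypothetical toy values chosen for illustration; they do not describe how any particular hearing aid classifies environments.

```python
from math import dist

def predict_1nn(train, query):
    """Predict the label of `query` as the label of the closest training example.

    `train` is a list of (features, label) pairs; features are tuples of floats.
    """
    _, label = min(train, key=lambda example: dist(example[0], query))
    return label

# Hypothetical toy data: (overall level in dB SPL, estimated SNR in dB) -> situation label.
train = [
    ((45.0, 20.0), "quiet"),
    ((50.0, 15.0), "quiet"),
    ((70.0, 0.0), "noisy"),
    ((75.0, -5.0), "noisy"),
]

print(predict_1nn(train, (72.0, 2.0)))  # an input not present in the training data
```

The "learning" here is nothing more than storing labeled examples; more capable methods generalize better, but the goal of predicting outcomes for unseen inputs is the same.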

Today, we are in the infancy of the machine-learning era, but some believe that artificial intelligence (AI) built on machine learning will soon be on par with human-level intelligence. What some people tend to forget when speculating about AI is that machine learning is driven by data: data that humans directly or indirectly generate. AI, machine learning, and deep learning are closely related concepts.

Machine learning and deep learning are subfields of AI (Figure 1). Humans can learn on their own from very few examples, which is something that AI cannot do. In tightly constrained tasks, however, we can build machine-learning systems that are able to query data intelligently on their own and learn from it. Google DeepMind’s deep Q-network, which builds on a reinforcement-learning method called Q-learning, is a good example: it learns to play Atari video games by itself. It relies on deep neural networks that can learn very complicated structures and strategies from massive amounts of data in finite time. Reinforcement learning lets the system work out a strategy for trying out different possible solutions, many of which are not successful. The result is a system that can learn while experimenting, although still within strictly constrained domains compared with what human-level intelligence can comprehend. So, while machine learning could revolutionize hearing aids, it will still be the clinician who solves new, slightly perturbed versions of the complex problem of adjusting hearing aids.

Figure 1. Machine learning is a subfield within artificial intelligence. Deep learning is a kind of machine learning well suited to tasks such as feature extraction.
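The trial-and-error learning described above can be illustrated with classic tabular Q-learning on a toy problem. This is a minimal sketch, not DeepMind’s system: their deep Q-network replaces the lookup table with a deep neural network and learns from game screens, whereas here the environment is a hypothetical five-state chain in which the only reward comes from reaching the last state. The agent sometimes picks random actions, many of them unsuccessful, and gradually learns which action pays off in each state.

```python
import random

N_STATES = 5          # states 0..4 on a toy chain; state 4 is the (terminal) goal
ACTIONS = (-1, +1)    # index 0 = move left, index 1 = move right
ALPHA = 0.5           # learning rate
GAMMA = 0.9           # discount factor for future reward
EPSILON = 0.2         # probability of trying a random action

def step(state, move):
    """Hypothetical environment: reward 1.0 only when the goal state is reached."""
    nxt = min(max(state + move, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def train(episodes=200, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]  # Q-table: q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit the best-known action, sometimes experiment.
            if rng.random() < EPSILON:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            nxt, reward, done = step(state, ACTIONS[a])
            # Q-learning update toward reward + discounted value of the best next action.
            target = reward if done else reward + GAMMA * max(q[nxt])
            q[state][a] += ALPHA * (target - q[state][a])
            state = nxt
    return q

q = train()
policy = ["right" if row[1] > row[0] else "left" for row in q[:-1]]
print(policy)
```

After training, the learned policy should be to move right in every non-goal state. The point is the learning loop (act, observe the reward, update the estimate), not the toy task itself.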

Before looking further into machine learning and hearing care, it is worth clarifying the difference between machine learning and “big data” (see “Quick Facts” sidebar). It is often believed that machine learning requires “big data”: huge amounts of data residing in data centers, waiting to be used for learning about hidden patterns and user behavior. This belief is wrong. Machine learning is often a significant ingredient in “big-data” applications, but many machine-learning systems do not use “big data” at all. These systems use machine learning not only to deliver a single optimal outcome, but also to learn a range of related things along the way. These learnings can later be collected and used to optimize the system itself, but collecting this data is not essential for a system to count as using machine learning.

Machine Learning in Hearing Aids

The problem of personalization through systematic adjustment of parameters has been studied considerably over the last three decades. For hearing aid personalization, the earliest attempts used a modified simplex procedure2,3 and, later, genetic algorithms4-7 to optimize parameters based on responses from users. While both were shown to provide improvements, even with few system parameters, both methods require an unrealistically high number of end-user interactions to reach a meaningful result for the hearing aid listener.
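To see why such methods demand so many interactions, here is a minimal genetic-algorithm sketch in which every candidate setting in every generation must be rated by the listener. It assumes a hypothetical simulated listener whose ratings simply reward closeness to a hidden ideal set of gain offsets in three bands; the population size, mutation size, and parameter range are illustrative assumptions, not values from the cited studies.

```python
import random

IDEAL = (4.0, -2.0, 6.0)   # hypothetical listener's preferred gain offsets (dB) in three bands
LOW, HIGH = -12.0, 12.0    # allowed range for each offset (dB)

def listener_rating(setting):
    """Simulated listener (hypothetical): the closer to IDEAL, the higher the rating."""
    return -sum((s - t) ** 2 for s, t in zip(setting, IDEAL))

def genetic_search(pop_size=10, generations=15, mutation_db=2.0, seed=1):
    rng = random.Random(seed)
    pop = [tuple(rng.uniform(LOW, HIGH) for _ in IDEAL) for _ in range(pop_size)]
    ratings_asked = 0
    best = None
    for _ in range(generations):
        # Every candidate in the population must be rated by the listener.
        ranked = sorted(pop, key=listener_rating, reverse=True)
        ratings_asked += len(pop)
        best = ranked[0]
        parents = ranked[: pop_size // 2]  # truncation selection: keep the better half
        children = []
        while len(children) < pop_size - len(parents):
            a, b = rng.sample(parents, 2)
            # Uniform crossover plus Gaussian mutation, clipped to the allowed range.
            child = tuple(
                min(HIGH, max(LOW, rng.choice(genes) + rng.gauss(0.0, mutation_db)))
                for genes in zip(a, b)
            )
            children.append(child)
        pop = parents + children
    return best, ratings_asked

best, n_ratings = genetic_search()
print([round(v, 1) for v in best], n_ratings)
```

Even with only three parameters and a perfectly consistent simulated listener, this run asks for 150 ratings; real listeners are less consistent, and real hearing aids have far more parameters.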

One way to solve this is by applying machine learning to optimize system settings. This has been attempted in hearing aid research and development. Typically, one of two approaches is taken:

1) Using machine learning as a way to analyze data or sound files from the lab,8 or

2) Applying machine learning in a real-time approach in optimizing hearing aid settings.9,10

While the latter approach brings the power of machine learning directly to the end-user by taking it out of the lab and into a real-life setting, we have yet to see this applied in a commercially available hearing aid.

Using Machine Learning to Navigate User Intentions

Real-life hearing presents challenges we aim to solve for users with hearing loss. Hearing in real life is constantly changing in context and circumstance, because real life itself changes from one moment to the next. Widex sound automation is driven by the assumption that an end-user who needs to think less about the hearing aid setting, and about which program to choose in a given listening situation, will be able to devote more cognitive resources to the task of listening.11

Modern hearing aids have reached a stage where they can easily detect what auditory environment the user is in at any given time. Therefore, it is paramount that, most of the time, the end-user can rely on the hearing aid automation to approximate an ideal setting.

But any automatic system and/or environment adaptation is built on assumptions about what to amplify and what not to amplify, and even the best available automatic system faces one major challenge: Knowing exactly what is important, in any given auditory scenario, for each specific user.

Although automatic adaptive systems have proven effective, users’ intentions may vary within the same auditory scenario. In a social situation, one user might prefer to raise the level of the conversation and lower the background music, while another might want something completely different. The individual’s intention regarding what they wish to achieve in an auditory task is what we call auditory intention.

Today, a 2.4 GHz wireless connection between a smartphone and hearing aids is common and has opened the door to novel developments, especially in how hearing aid users interact with their devices via smartphone. These interactions range from basic hearing aid adjustments and direct streaming of music and speech all the way to ecological momentary assessment. The processing power of the smartphone also makes it possible to run powerful algorithms that previously needed dedicated, standalone PCs. Consequently, we can provide complex control in a manner that is intuitive to the user, and with it a chance to interpret the user’s auditory intention.

Auditory Intention

Hearing aid personalization, more commonly referred to as hearing aid fitting and fine-tuning, is carried out today using predefined prescription rules followed by what often amounts to several fine-tuning attempts.12 A prescription rule defines gain and compression settings of hearing aids based on the user’s audiogram. The deterministic prescriptions are developed based on decades of research studying the human auditory system and on empirical and practical experience.13 Generally, the purpose of any prescription is to make speech audible to the user. However, the prescriptive rules are based on the idea that, given the audiogram, one size fits all.
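The deterministic nature of a prescription can be illustrated with the classic half-gain rule, a historical linear rule that sets the gain at each frequency to half the hearing threshold level. Modern prescriptions such as NAL-NL2 and DSL v5.0 are far more elaborate and also set compression, so this sketch only illustrates the principle; the audiogram values are hypothetical.

```python
def half_gain_rule(audiogram):
    """Classic half-gain rule: gain (dB) = 0.5 x hearing threshold level (dB HL) per frequency."""
    return {freq_hz: 0.5 * hl_db for freq_hz, hl_db in audiogram.items()}

# Hypothetical audiogram: frequency (Hz) -> hearing threshold level (dB HL).
audiogram = {250: 20, 500: 30, 1000: 40, 2000: 55, 4000: 65}
print(half_gain_rule(audiogram))
# → {250: 10.0, 500: 15.0, 1000: 20.0, 2000: 27.5, 4000: 32.5}
```

Any two users with this audiogram receive exactly the same gains, which is the one-size-fits-all assumption described above.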

During the fine-tuning procedure (Figure 2), the user’s intention is translated twice for every hearing aid adjustment. The first translation is the often-ambiguous explanation by the user about how the sound is perceived and whether this perception involves an actual problem. The second translation occurs when the HCP seeks to understand what problems the user is describing.

Figure 2. Any user will at any given time have an auditory intention and a related ideal setting of the hearing aid. Here, that ideal setting is illustrated by the colored space. In a perfect hearing aid personalization, the hearing aid will adjust for the auditory intention of the user (1) to drive new settings in the hearing aid (2) until the auditory scenario calls for a new ideal setting matched to the auditory intention of the user (3). Adapted with permission from Nielsen et al.9

Therefore, a lot can get lost in translation even before the clinician considers what the problem is with the current hearing aid setting; in most cases, they must resort to a knowledge-based, trial-and-error approach to get closer to more personalized hearing aids.

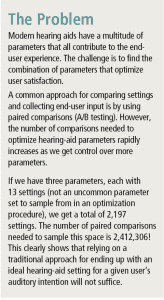

The above process also assumes that the user reports that there is a problem with the current setting. Hearing aid users, especially first-time users, are not always aware that constant and valuable feedback from them is key to obtaining good hearing aid performance, benefit, and satisfaction (see sidebar, “The Problem”).

Therefore, at least two problems persist with the existing hearing-aid personalization routine. First, the vast number of adjustable parameters embedded in digital hearing aids makes manual, trial-and-error approaches extremely difficult to keep track of in practice. Second, the entire feedback link from the user, through the HCP, to the adjustment made in the hearing aids, entails a risk of errors in the translations, and, as a result, non-optimal adjustments being made.
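A quick calculation shows why manual trial and error scales so poorly. Assuming, purely for illustration, that every parameter can take one of five levels, the number of distinct settings grows exponentially with the number of parameters, and the number of pairs one would need to compare to rank them all exhaustively grows roughly with the square of that.

```python
def settings_count(n_parameters, levels_per_parameter):
    """Distinct settings when each parameter takes one of a fixed number of levels."""
    return levels_per_parameter ** n_parameters

def pairwise_comparisons(n_settings):
    """A/B trials needed to compare every pair of settings once: n(n - 1) / 2."""
    return n_settings * (n_settings - 1) // 2

# Hypothetical: each parameter has 5 selectable levels.
for n_params in (1, 3, 5, 10):
    n = settings_count(n_params, levels_per_parameter=5)
    print(f"{n_params:>2} parameters: {n:>11,} settings, {pairwise_comparisons(n):>20,} pairs")
```

With ten such parameters there are already 9,765,625 possible settings, far beyond what any clinician or end-user could explore by hand.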

Another level of hearing aid personalization comes from end-user control, whether through program selection on the device, via remote controls, or, more recently, through mobile-phone applications. Looking at how end-users use programs in their everyday lives shows that they interact with them very differently.14 Consequently, end-users display unique behaviors both in using hearing aid program controls and in the custom settings they obtain through fine-tuning during consultations, and their auditory intention indeed manifests as individual preferences and behaviors.

A Widex Machine Learning Application

As we have seen, hearing aid personalization, both in the clinic and in real-life usage, can be complicated. Users show individual differences influenced by unique auditory scenarios and varying auditory intentions. Add to this the immense number of parameters available in a modern hearing aid.

To bridge the gap between auditory scenario, auditory intention, and hearing aid parameters, we suggest a solution that builds on machine learning. With machine learning available through, for example, a smartphone, we can quickly adapt advanced hearing aid settings, all driven by the end-user’s preferences and intentions.

To allow for easy user interaction with this machine-learning solution, we propose applying a simple, well-tested interface in which multiple parameters can adapt to the user’s auditory intention in a few simple steps. Through a step-wise approach, the system collects observations based on A/B comparisons and makes predictions about the user’s preferred setting for a given auditory intention.
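As a rough illustration of how preferences can be learned from A/B comparisons, the sketch below fits a Bradley-Terry model: each candidate setting gets a latent score, and the probability that one setting is preferred over another is a logistic function of their score difference. This is a simpler model swapped in for brevity; the published approach by Nielsen et al.15 uses Gaussian processes over continuous parameters. The candidate indices and trial outcomes are hypothetical.

```python
import math

def fit_bradley_terry(n_settings, comparisons, steps=500, lr=0.1, l2=0.01):
    """Estimate a latent preference score for each candidate setting from A/B outcomes.

    comparisons: list of (winner, loser) index pairs, one per A/B trial.
    Model: P(winner preferred over loser) = sigmoid(score[winner] - score[loser]),
    fitted by gradient ascent on the L2-regularized log-likelihood.
    """
    scores = [0.0] * n_settings
    for _ in range(steps):
        grad = [-l2 * s for s in scores]  # gradient of the L2 penalty
        for winner, loser in comparisons:
            p_win = 1.0 / (1.0 + math.exp(-(scores[winner] - scores[loser])))
            # Gradient of log P(win): push the winner up and the loser down by (1 - p_win).
            grad[winner] += 1.0 - p_win
            grad[loser] -= 1.0 - p_win
        scores = [s + lr * g for s, g in zip(scores, grad)]
    return scores

# Hypothetical A/B outcomes among four candidate settings (indices 0..3).
trials = [(2, 0), (2, 1), (1, 0), (2, 3), (3, 0), (2, 1), (3, 1)]
scores = fit_bradley_terry(4, trials)
best = max(range(4), key=lambda i: scores[i])
print(best, [round(s, 2) for s in scores])
```

The small L2 penalty keeps the scores finite even for a setting that has never lost a comparison, which matters when only a handful of trials are available.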

Hence, the system both uses the user input already collected and optimizes the next A/B trial setting to limit the number of steps needed to reach an ideal hearing aid setting (Figure 3). All the end-user must do is keep his/her auditory intention in mind and rate the suggested settings accordingly. The machine-learning algorithm takes care of the rest.
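Choosing the next A/B trial can be sketched with uncertainty sampling, a common active-learning heuristic: present the pair whose outcome the current model is least sure about, since that answer is expected to be the most informative. This is a simplification under stated assumptions, not necessarily the selection criterion Widex uses; the scores are assumed to come from a pairwise preference model, and `times_compared` is a hypothetical record of how often each pair has already been shown.

```python
import itertools
import math

def predicted_win_prob(scores, a, b):
    """Bradley-Terry-style probability that setting a is preferred over setting b."""
    return 1.0 / (1.0 + math.exp(-(scores[a] - scores[b])))

def binary_entropy(p):
    """Uncertainty (in nats) of a yes/no outcome with probability p."""
    p = min(max(p, 1e-12), 1.0 - 1e-12)  # avoid log(0) for extreme score gaps
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))

def next_pair(scores, times_compared):
    """Return the pair (a, b) whose predicted A/B outcome is most uncertain.

    Ties are broken in favor of pairs shown less often so far.
    """
    pairs = itertools.combinations(range(len(scores)), 2)
    return max(
        pairs,
        key=lambda ab: (
            round(binary_entropy(predicted_win_prob(scores, *ab)), 9),
            -times_compared.get(ab, 0),
        ),
    )

scores = [0.0, 1.0, 2.0, 2.1]  # e.g. from a preference model fitted to earlier trials
print(next_pair(scores, {}))   # → (2, 3)
```

Here the two top-scoring candidates are nearly tied, so comparing them directly is the most useful next question; a pair whose winner is already obvious would waste one of the user's few ratings.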

Figure 3. Conceptual overview of the Widex machine-learning approach. The user provides input to the system in steps of paired comparisons, moving closer to the ideal setting with each step. Adapted with permission from Nielsen et al.15

Through previous research16 and recent verification testing at Widex, we have seen promising results as to what can be achieved by empowering our users with machine learning using a familiar data-collection method. This system is capable of reaching a recommended setting for the end-user’s listening intention in around 20 comparisons, a huge reduction from the 2,412,306 paired comparisons that a more traditional approach would require.
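The article does not say how the figure of 2,412,306 comparisons was obtained. One configuration that reproduces it exactly, offered here only as a hypothetical reading, is an exhaustive round of every pair among 2,197 candidate settings, for example three parameters with 13 levels each:

```latex
N = 13^3 = 2197, \qquad
\binom{N}{2} = \frac{N(N-1)}{2} = \frac{2197 \times 2196}{2} = 2\,412\,306
```

Against that count, around 20 comparisons is a reduction of roughly five orders of magnitude.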

Summary

Machine learning is an extremely interesting technology for both hearing aid development and real-time, end-user interaction. Machine learning has the potential to address some of the current issues in hearing aid fitting, such as translating user comments into meaningful and effective hearing aid settings, as well as accounting for auditory intention in a given auditory scenario, factors that could otherwise limit end-user satisfaction. Addressing these issues will help both audiologists and end-users.

Looking into the near future, we see machine learning applied in hearing aids, allowing the end-user to quickly modify hearing aid settings to accommodate auditory intention and improve satisfaction.

Correspondence can be addressed to HR or Oliver Townend.

Original citation for this article: Townend O, Nielsen JB, Ramsgaard J. Real-life applications of machine learning in hearing aids. Hearing Review. 2018;25(4):34-37.

Biography: Oliver Townend, BSc, is Audiologist and Audiology Communications Manager; Jens Brehm Nielsen, PhD, is Architect, Data Science & Machine Learning; and Jesper Ramsgaard, MA, is Audiological & Evidence Specialist at Widex A/S in Lynge, Denmark.

References

1. Marr B. The top 10 AI and machine learning use cases everyone should know about. Forbes. September 30, 2016. Available at: https://www.forbes.com/sites/bernardmarr/2016/09/30/what-are-the-top-10-use-cases-for-machine-learning-and-ai/#1e3d326594c9

2. Neuman AC, Levitt H, Mills R, Schwander T. An evaluation of three adaptive hearing aid selection strategies. J Acoust Soc Am. 1987;82(6):1967-1976.

3. Kuk FK, Pape NMC. The reliability of a modified simplex procedure in hearing aid frequency-response selection. J Speech Hear Res. 1992;35(2):418-429.

4. Takagi H, Ohsaki M. IEC-based hearing aid fitting. In: IEEE SMC ’99 Conference Proceedings. Tokyo, Japan; October 12-15, 1999;3:657-662.

5. Durant EA, Wakefield GH, Van Tasell DJ, Rickert ME. Efficient perceptual tuning of hearing aids with genetic algorithms. IEEE Transactions on Speech and Audio Processing. 2004;12(2):144-155.

6. Baskent D, Eiler CL, Edwards B. Using genetic algorithms with subjective input from human subjects: Implications for fitting hearing aids and cochlear implants. Ear Hear. 2007;28(3):370-380.

7. Dijkstra TMH, Ypma A, de Vries B, Leenen JRGM. The learning hearing aid: Common-sense reasoning in hearing aid circuits. Hearing Review. 2007;14(11)[Oct]:34-51.

8. Wang D. Deep learning reinvents the hearing aid. IEEE Spectrum. December 6, 2016. Available at: https://spectrum.ieee.org/consumer-electronics/audiovideo/deep-learning-reinvents-the-hearing-aid

9. Nielsen JB, Nielsen J, Sand Jensen B, Larsen J. Hearing aid personalization. In: Proceedings from the 3rd NIPS Workshop on Machine Learning and Interpretation in Neuroimaging; December 5-10, 2013; Lake Tahoe, NV.

10. Aldaz G, Puria S, Leifer LJ. Smartphone-based system for learning and inferring hearing aid settings. J Am Acad Audiol. 2016;27(9):732-749.

11. Kuk F. Going BEYOND: A testament of progressive innovation. Hearing Review. 2017;24(1)[Suppl]:3-21.

12. Dillon H. Hearing Aids. New York, NY: Thieme Medical Publishers; 2012.

13. Humes LE. Evolution of prescriptive fitting approaches. Am J Audiol. 1996;5:19-23.

14. Johansen B, Petersen MK, Korzepa MJ, Larsen J, Pontoppidan NH, Larsen JE. Personalizing the fitting of hearing aids by learning contextual preferences from Internet of Things data. Computers. 2017;7(1):1.

15. Nielsen JBB, Nielsen J, Larsen J. Perception-based personalization of hearing aids using Gaussian processes and active learning. IEEE/ACM Transactions on Audio, Speech, and Language Processing. 2014;23(1):162-173.

16. Nielsen JB. Systems for personalization of hearing instruments: A machine learning approach [PhD thesis]. Technical University of Denmark (DTU); 2014. Available at: http://www.forskningsdatabasen.dk/en/catalog/2389473298
