Inside the Research | November 2019 Hearing Review

Of all the audiologists I’ve met in the last 35 years, there is one who is so vibrant, entertaining, smart, witty, expressive, compassionate, and nurturing that simply mentioning her name in some circles makes everyone smile. Carol Flexer, PhD, is that person. Dr Flexer is an expert in the development and expansion of listening, speaking, and literacy skills in infants and children, including those with all degrees of hearing loss. Licensed as an audiologist and as an auditory-verbal therapist (LSLS Cert. AVT), she is a Distinguished Professor Emeritus at The University of Akron’s School of Speech-Language Pathology and Audiology, and she has authored more than 155 publications, including 14 books. She is also a past president of the Educational Audiology Association, the American Academy of Audiology, and the AG Bell Association for the Deaf and Hard of Hearing Academy for Listening and Spoken Language.

More recently, Dr Flexer co-authored the fourth edition of her and Elizabeth Cole’s book, Children With Hearing Loss: Developing Listening and Talking, Birth to Six,1 now available from Plural Publishing. We thought this provided a good opportunity to catch up with Dr Flexer and her thoughts about pediatric hearing loss and language development.

Beck: Hi Carol! It is always a joy to speak with you! Congratulations on the fourth edition of Children With Hearing Loss.

Flexer: Hi Doug! Thanks very much. Elizabeth and I are very excited to have the fourth edition published.

Beck: And just a quick note about your co-author, Elizabeth B. Cole, EdD, who unfortunately is not joining us today. Elizabeth was a professor at McGill University in Montreal and former Director of CREC Soundbridge in Connecticut. I should note she is also an auditory-verbal therapist, a French teacher, and a Peace Corps volunteer.

Flexer: That’s right. Elizabeth and I have collaborated on many books and projects, and she is a good friend and a joy to work with. In this fourth edition, we divided the book into two parts. Part One is titled “Audiological and Technological Foundations of Auditory Brain Development,” which I wrote, and Part Two is “Developmental, Family-Focused Instruction for Listening and Spoken Language Enrichment,” which Elizabeth wrote.

Beck: Excellent. In the preface to the fourth edition, you report that some 12,000 babies are identified with hearing loss in the USA each year. Further, another 6,000 or so children younger than 3 years of age who passed their newborn hearing screening develop hearing loss every year. So, in total, approximately 18,000 children are newly identified with hearing loss in the USA every year?

Flexer: That’s right. No one knows the exact numbers, but many sources support the figures you’ve mentioned. Now that we know how vast this population is, it’s very important, as professionals and as parents, to identify these babies and treat them as early as possible.

Beck: That makes very good sense. The idea of waiting and watching is not in the child’s best interest, as it takes years of listening before one learns to talk and read. In the central auditory nervous system, the development and integration of neural pathways is tremendously interwoven with language development. That is, for children with hearing loss, auditory information may not reach the brain, or may be distorted by the time it reaches the brain, which makes learning language much more difficult if the hearing loss is left untreated.

Flexer: Exactly, and untreated hearing loss can lead to auditory and knowledge deficits. Reduced or distorted auditory information reaching the brain can compromise speech, language, academic, and social skills, and those effects can be substantial and may last a lifetime.

Beck: And, unfortunately, most parents are not prepared for hearing loss, as 95% of all children with hearing loss are born to parents with normal hearing. Further, depending on which professionals are consulted, the parents may or may not receive current information about the most effective way to manage hearing loss for each individual child to reach the desired outcomes expressed by the family.

Flexer: Yes, and Doug, as you and I have discussed for a long time now,2 the only reason to manage hearing loss through hearing aids, cochlear implants, FM systems, digital remote mics (DRMs), and other hearing technologies is to access the brain with auditory information. And so, when we’re working with parents it’s important to carefully steer the conversation from vowels and consonants and audiograms to the brain, and the brain’s primary role in listening and learning.

Not that the ear isn’t important; of course it is…but having a discussion about the brain may be more impactful and meaningful to the parents, as we discuss how the child learns to listen in order to understand spoken conversations.

In fact, I remind parents that our five senses (sight, hearing, smell, taste, and touch) capture different types of raw environmental information and transform that information into neural impulses read by the brain. As such, I refer to the ear as the “doorway to the brain” for auditory information, and I say hearing loss is something that blocks the doorway. And so, our goal is to breach that doorway as early as possible with the best technology for their child. That might be a hearing aid, a bone-anchored device, a cochlear implant, or perhaps an auditory brainstem implant, and/or an FM or digital remote microphone (DRM) system…it all depends on the specific anatomy and needs of their child. There are no shortcuts. Each child needs to be evaluated professionally and thoroughly to determine the best habilitative and rehabilitative strategies and technologies for that particular child.

Beck: How do you view the importance of DRMs for children?

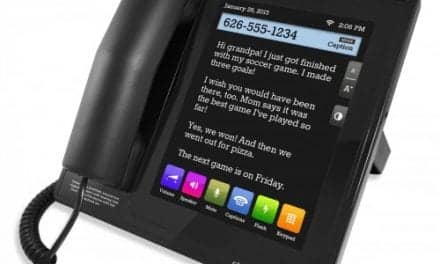

Flexer: All hearing systems benefit from the addition of a remote microphone system, and of course that includes FM and DRM systems. In your interview with Dr Jane Madell [in the July 2019 Hearing Review],3 she reported that all adults and all children with hearing and/or listening problems require and will benefit from remote microphones, and I absolutely agree. It’s all about improving the signal-to-noise ratio (SNR). Even though all systems can make sounds louder (ie, audible), the end-game is improving the brain’s ability to listen, and that depends absolutely on very high-quality sound delivered at a maximal SNR. Digital remote mics provide that solution.

Beck: And to be clear, when the SNR is maximal and the quality of sound is excellent, that does improve the ability of the listener to understand speech in noise (SIN). As such, we actually do have reasonably priced, portable solutions that enhance SIN ability, but many professionals are reluctant to demo, instruct, and sell remote microphone technology.

Flexer: I think everyone benefits from remote mics, even babies. In fact, babies should be fitted with remote microphones soon after receiving their primary technology.

Professionals tend to think their patients would never use assistive device technologies, and that’s true if the patients don’t know those technologies exist! But a simple demo of a remote mic system can make all the difference in the world, and effective use of a remote microphone will always require reminders and coaching, for adults and children alike.

Emerging studies clearly demonstrate that the use of remote mics vastly increases the child’s exposure to words and language. We should not sell remote mics as an accessory; they should be included in the acquisition of hearing aids as a brain development tool. Further, DRMs are needed not just at school; they are needed at home, at play, while watching TV, socializing, and more. And by the way, DRMs are included in just about all cochlear implant systems, which attests to their usefulness. I think we should do the same for hearing aids.

Beck: I agree, and further, imagine what would have happened if Steve Jobs had shown the iPhone and its texting function in 2007, and said “This is our new idea. You won’t need to (or want to) answer the phone, you just type using your thumbs…” Nobody would have thought it was a good idea, and very likely people would have said it’s crazy, inconvenient, and too expensive. In fact, there is a Steve Jobs quote that says, “A lot of times, people don’t know what they want until you show it to them.”4 Obviously, texting caught on and dominates much of our communication.

I would argue it’s very much the same for DRMs. Hearing care professionals (HCPs) should include DRMs in every hearing aid sale, not as an option but as a necessary accessory. We know that when hearing aids are expertly fitted by an HCP, the benefits are substantial. Yet we also know that in a noisy one-on-one conversation in a restaurant or at a cocktail party, hearing aids alone cannot always provide a beneficial SNR.

However, DRMs can provide an extra 12-15 dB of SNR, so why not demo it, include it, and solve the problem for most of the patients, most of the time? When we include DRMs, we have literally provided patients with the tools they need to succeed in noise. DRMs can change the patient’s perspective from “I cannot understand speech in noise” to “When I am in noise, this is the tool I need to understand.” Further, when the patient comes back for a hearing aid check and reports ongoing difficulty with speech in noise, imagine how satisfying it would be to ask, “Did you use the remote mic?” And, of course, they will say “no.” Then, instead of trying to sell them something else, you reinstruct, demo, and perhaps (gently) remind the patient that you included the DRM in their purchase because every now and then it’s absolutely necessary. Of course, some won’t use it, but most will once they know how. Importantly, you have provided them with a tool to manage their speech-in-noise problem.

Flexer: And to round out our discussion of SNR and DRMs, it’s critical to understand that children need an SNR 15-20 dB higher than normal-hearing adults do to perceive, process, and remember speech, because they are learning language and building their brains, and because they don’t yet have the neural “top-down capacity” of adults. And, importantly, hearing is a first-order event: hearing, or the reception of auditory information, must occur if the brain is to perceive, listen, and learn spoken language and gain knowledge.

Beck: Thanks Carol. I’d like to conclude by quoting you: “The fitting and use of hearing aids, cochlear implants and remote mic technologies are critical first steps in attaining the desired outcomes of listening, talking, reading and learning.”1 That says it all!

Flexer: Thanks Doug. I appreciate your interest in the book and I’ll look forward to the next time we get together!

Correspondence can be addressed to Dr Beck at: [email protected]

Original citation for this article: Beck DL. Children and the development of listening and talking skills: An interview with Carol Flexer, PhD. Hearing Review. 2019;26(11):38-40.

References

1. Cole EB, Flexer C. Children with Hearing Loss: Developing Listening and Talking, Birth to Six. 4th ed. San Diego, CA: Plural Publishing; 2020.

2. Beck DL, Flexer C. Listening is where hearing meets brain…in children and adults. Hearing Review. 2011;18(2):30-35.

3. Beck D. Addressing the needs of pediatric patients and their parents: An interview with Jane Madell, PhD. Hearing Review. 2019;26(7):30-31.

4. Mui C. Five dangerous lessons to learn from Steve Jobs. Forbes. https://www.forbes.com/sites/chunkamui/2011/10/17/five-dangerous-lessons-to-learn-from-steve-jobs/#767834f33a95. Published October 17, 2011.