This braininess is also reflected in UTD/Callier’s audiology faculty, hearing scientists, and even their students. For example, if you’re inclined to think an AuD or PhD might be too small-minded an endeavor for your gigantic cranial capacity, at UTD/Callier you have the option of obtaining two doctoral degrees at once—the AuD-PhD—in which case, you would enjoy the title of “Doctor-Doctor.” But obtaining the degree doesn’t exactly entail a series of sleeper courses. Audiology students at the university have their curriculum presented by such notable audiologists and hearing scientists as George Gerken, PhD, Aage Møller, PhD, James Jerger, PhD, Susan Jerger, PhD, Ross Roeser, PhD, Michael Kilgard, PhD, Peter Assmann, PhD, Anu Sharma, PhD, Linda Thibodeau, PhD, and Emily Tobey, PhD, to name only a few. Likewise, students interested in topics like clinical and pediatric audiology, behavioral acoustics, tinnitus, or neurophysiology can draw on the talents of a large pool of researchers like Jackie Clark, PhD, Philip Loizou, PhD, and Gary Wright, PhD, who are working in the clinics and labs.

To a large extent, the history and focus of UTD/Callier reflect its Texas surroundings. UTD was essentially started in the 1960s in response to the need of Texas Instruments (TI, then called Geophysical Service Inc) for better-educated, homegrown engineers. Today, there are more than 900 high-tech companies within a 5-mile radius of the UTD campus, and the university has fostered close relationships with many of them.

“The history we have is an indication of our strengths,” says UTD/Callier Director Ross Roeser, PhD. “First, we’re located in an area that focuses on high tech and telecommunications. UTD is a non-traditional university that concentrates primarily on computer technology and engineering, and there’s no question that this gives us a distinct advantage. Second, being located near the campus of a major medical school [the Univ of Texas Southwestern Medical Center], we also have excellent interactions with various medical specialties and can draw on the considerable talents of people like Angela Shoup, PhD, Peter Roland, MD, Mike Devous, PhD, and Paul Bauer, MD. Third, we have the benefit of having a preschool and clinic within the Callier Center itself that directly links our researchers to the patient population we’re serving. And, finally, the new Advanced Hearing Research Center building and our laboratories at the UTD main campus provide us with an excellent, highly interactive environment in which to conduct research.”

In the 1960s, a Dallas woman named Lena Callier, who had a hearing impairment, established a trust and designated that it be used to address hearing loss in the state of Texas. The late Aram Glorig, MD, was asked to serve as the Callier Center’s founding director and to organize the new programs. The doors opened in 1969, and in 1975, the center merged with UTD’s School of Human Development.

Roeser has dedicated the last 20 years to creating a multidisciplinary faculty focused on excellent education, clinical care, and groundbreaking research. In recent years, UTD/Callier has also benefitted from a variety of funding sources, including numerous NIH grants and private contributions.

In June, ground was broken on the new $5 million, 23,500-sq.-ft. UTD/Callier-Richardson satellite facility (about 20 miles north of Callier Center) on the UTD campus, which will allow further expansion of the Center’s clinical services and research labs. The facility is expected to open in fall 2003. “The expansion of the Callier Center’s presence beyond its current location near downtown Dallas will help us extend outreach activities, foster cooperative efforts with other UTD Schools, and forge collaborative ties with the hundreds of high-tech firms in close proximity to the new location,” says Bert Moore, PhD, dean of UTD’s School of Human Development.

“A lot of the expansion and staff additions are directly attributable to the support we’ve received from the university’s administration and our dean,” says Roeser. “These people are truly focused on developing quality faculty and research. And, as people have been identified, we’ve been able to court them and convince them that this is a place that would be good for their careers and personal lives. The administration has a high regard for talent, and it speaks volumes for them—as well as the respect they have for our staff. As you talk with the various faculty, for example, what you’ll find is that we have over $2 million in extramurally funded research, which also shows the administration that we are responsible and capable of providing first-tier research.”

Research at UTD/Callier

The following is an update on a few of the research activities at UTD/Callier, and is intended only as a sampling of the projects being undertaken. Many other distinguished scientists and clinicians are involved in important work that could not be covered here due to space restrictions.

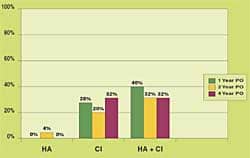

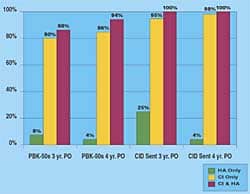

Acquisition of auditory/language skills in children: Recently, Emily Tobey, PhD, was the co-investigator in a 5-year study with Ann Geers, PhD, of the Central Institute for the Deaf (CID), St Louis, that looked at the speech, language, and reading competence of 181 children ages 8-9 in 33 states and 5 provinces. Each child had been deafened before age 3 and implanted by age 5, and all had used a cochlear implant (CI) for more than 3 years. The researchers found that deaf children who used CIs exhibited improved performance over what had been observed in deaf children who used hearing aids.

“Basically, what we found in this study,” says Tobey, “was that one independent variable that predicted performance was the child’s IQ. Unfortunately, that’s something a practitioner really can’t do much about. However, the strong individual predictors were all of those factors you would imagine with a CI, except one: we thought that the duration of implant use would be very important. Instead, what was important was the amount of time a child had with SPECTRA or the newest speech processing strategy. The study implied that having good insertion so all the electrodes are active; having a lot of professional experience and being up-to-date on the latest technology; and having good dynamic range and settings for the patient’s loudness growth (all things that an audiologist is responsible for doing) are very strong predictors for a child’s later performance. So the role of the audiologist is key to the entire cochlear implantation process.”

In following up this study, Tobey has joined a team of researchers from six universities who will analyze the development of language, speech perception, quality of life, and psychosocial development in younger children, possibly as young as 9 months (the CID study was limited to FDA guidelines of children implanted at age 2 or older).

In conjunction with researchers at the Univ. of Texas at Austin, Tobey is also conducting an NIH-funded study that looks at the possibility of a critical development period for the acquisition of auditory, speech perception, and language skills. Recent research indicates that a critical developmental period may take place during the first year of life. “The question is, Are there non-invasive techniques that we could use to determine how well a baby is using auditory information within the first year of life?” says Tobey. The researchers are making vocal recordings of young hearing-impaired babies and normal-hearing babies in the first 3 years of life. Tobey says the vocalizations are fairly alike until 8-10 months of age, at which time normal-hearing babies begin to combine different sounds. This fails to occur in deaf babies during this period. Is it possible that one of the reasons speech is so difficult for many deaf children who receive CIs is that they miss out on this critical development stage?

“We don’t exactly know how information from a cochlear implant influences the earliest stages of vocal development, and that’s what we hope to address in this study,” says Tobey. “For example, once an infant is identified with hearing loss at 2 months of age, we should also be able to track whether that baby is getting perceptual benefit from the hearing aid by about 8 months of age. Presumably, those babies who are not getting benefit would then be candidates for cochlear implants.” The team hopes their research will lead to an early check-list that helps audiologists and parents make better informed decisions based on the baby’s vocal behaviors.

Auditory neuropathy and cochlear implants: Anu Sharma, PhD, is researching auditory neuropathy. Her objective is to provide more information for parents of children who have auditory neuropathy, giving them a better idea on useful approaches relative to intervention strategies. Working with Jon Shallop, PhD, [see article on the Mayo Clinic and auditory neuropathy in the Sept. 2001 HR, pages 24-26] during the Mayo Clinic Parents’ Conference, she collected a large number of interesting case studies.

“Yvonne Sininger has suggested that one in 10 children who have sensorineural hearing loss may also be diagnosed with auditory neuropathy,” says Sharma. “A really interesting question is, ‘Of these children, who would benefit from a cochlear implant?’ My goal is to collect data on central auditory maturation from children who have auditory neuropathy and, in real time, provide guidance to hearing care professionals and parents on the best course of action.”

With auditory neuropathy, it’s not always clear if the patient actually has a cortical deficit relative to auditory stimulus, says Sharma. Additionally, many of these children seem to hear well, but they just don’t understand what is being said. Sharma and her colleagues are trying to determine if this acoustic stimulation is enough for the cortex to develop, and they are analyzing what happens to the development of the central pathways in the presence of auditory neuropathy.

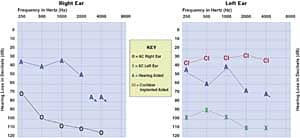

One of the goals of Sharma’s lab is to obtain a better understanding about the limits of brain plasticity. “In some of the auditory neuropathy children who have received a cochlear implant, we found that, when the subjects were matched for age, they were performing no worse than the congenitally deaf children who had been implanted. So, it’s worthwhile to note that some of these children with auditory neuropathy, after implantation, have the same performance results as other children implanted with CIs. But the question is, ‘What separates these children with auditory neuropathy from the kids who don’t do as well?’”

Lexical processing in children with hearing loss: All the words we know appear to be stored in a mental dictionary or “lexicon.” How is this information organized and retrieved when using words? Susan Jerger, PhD, and her lab have been looking at not only the meanings, but also the sound patterns, of spoken words. Her research examines two levels of language processing (phonologic and semantic) in children with normal or impaired auditory perceptual abilities.

Specifically, Jerger has been assessing children’s knowledge about the meanings and names of words with a cross-modal picture-naming task. She says that the patterns of facilitation and interference provide an implicit measure of children’s word processing, over and above the overt naming response. With this task, the content and organization of the “mental dictionary” in children can be evaluated.

“If you are asked to name a picture in the presence of a speech distractor,” explains Jerger, “the picture and the spoken word activate different processing sequences. The picture activates the semantic code first and the phonological code second, whereas the word activates the phonological code first and the semantic code second. For example, when a picture of a dog is presented, the concept is activated (eg, four legs, pet, barks, etc), which activates a set of meaning-related items from which you retrieve the correct word, ‘dog,’ as the output. However, when the word ‘dog’ is spoken, a group of sound-related items is activated from which you select the correct alternative, which activates the concept (eg, four legs, pet, barks, etc).”

“If the onset of the spoken word is staggered in relation to the onset of the picture,” says Jerger, “we can cause different stages of the processing to overlap. For example, if you present a speech distractor well before the onset of the picture, there is no effect on picture naming. Then you gradually bring the onset of the distractor closer and closer to the onset of the picture, until picture naming is affected. Then you continue by moving the onset of the distractor until it lags the onset of the picture and picture-naming is no longer affected. This is what is meant by tracking the time course of semantic activation.

“What we’ve found,” continues Jerger, “is that this time course of activation is longer in some children with hearing loss than it is in children with normal hearing. In other words, it’s as if it takes them a longer period of time to select the correct word alternative.” Some children with hearing loss, according to Jerger, seem to develop normal lexical semantic representations. However, the dynamics of semantic processing appear to be slowed by the presence of early childhood hearing loss.

“I think this may prove to be important for theories on the role of auditory input in word learning,” says Jerger. “Word learning depends on auditory input in young children, so it is interesting to understand better the role of auditory input in acquiring words in children with hearing loss and limited auditory perceptual abilities. Also, this research has the potential to offer wonderful opportunities to sharpen our ability to remediate and habilitate children with hearing impairment. It may also help us understand why there is such huge variability in benefit from amplification relative to the same degree of hearing loss.”

Electrophysiological markers of auditory processing disorder: James Jerger, PhD, and graduate students who make up the recently established Texas Auditory Processing Disorder Laboratory are conducting research on the underlying brain mechanisms responsible for auditory processing disorders (APD) in both children and adults. The research currently uses basic electrophysiology, particularly event-related potentials (ERPs) that are recorded from scalp electrodes.

“We’re recording these ERPs in response to target words that we’ve presented in various kinds of dichotic paradigms,” says Jerger. “We’re also looking at ERPs in relation to visual targets. The most promising avenue we’re pursuing at the moment is an analysis of temporal synchrony in the two hemispheres of the brain. We think—and hope—that this will lead us to an eventual biological marker of APD.”

Jerger says that one of the greatest current problems relative to APD is that its diagnosis is “dicey”; diagnosis is all based on behavioral measures that can be influenced by factors totally unrelated to auditory problems. “We need to find some other identifiers or landmarks, in the brain waves for example, that allow us to easily differentiate APD from an area that might otherwise mimic these same types of poor listening skills.”

Tinnitus research and treatment: Although Aage Møller, PhD, has devoted much of his effort in the last few years to publications like his recent book, Hearing: Its Physiology and Pathophysiology (Academic Press, 2000), he has also continued his research on tinnitus and autism.

Specifically, he is interested in non-classical auditory pathways relative to tinnitus. It is thought that auditory information ascends to the brain in two parallel pathways, and Møller says one pathway has received very little research attention. He says this non-traditional pathway bypasses the primary cortex, and has strong and direct connections to the amygdala in the limbic system. (The amygdala is a mass of gray matter in the front of the temporal lobe that is believed to play important roles in emotional states, and it is connected to many areas within the brain.) Møller points out that patients with severe tinnitus often have depression. Although many researchers believe that these people are depressed due to the tinnitus symptoms they suffer from, Møller is not so sure: “It’s possible their depression could be associated with the amygdala. Many of these patients have phonophobia, with irrational fears about certain sounds…The non-classical pathways have a straight, direct route from the thalamus, and [unlike the classical pathways which are highly modulated] there is no way this signal can be modified. In other words, there is little or no control; it’s raw, unprocessed information that reaches the amygdala.”

Additionally, Møller is interested in the similarities between tinnitus and pain, because pain is also associated with the amygdala.

George Gerken, PhD, and colleagues have been working on concepts related to tinnitus and inhibition, and their work suggests that there are different types of tinnitus. He points out that most patients with severe tinnitus describe the location and characteristics of their tinnitus in ways that support the idea of significant involvement of the central auditory system.

Pursuing this concept of central involvement, the researchers have been using standard electrical recording techniques to sample various areas of the brain. They have found unusually large brain potentials coming from the auditory cortex in some tinnitus patients. “Now we’re concentrating on recently funded work in which we’re checking central auditory system gain to see if it is higher in people who have tinnitus,” says Gerken. He is also chief of the Callier Tinnitus and Hyperacusis Clinic, which has used tinnitus retraining therapy (TRT) on about 165 patients since 1996.

Identifying the Design Features of Speech vs. Noise: How do people separate speech from noise, particularly in reverberant settings? Peter Assmann, PhD, has focused his research on this question, and his work could conceivably lead to future noise reduction/separation strategies in hearing aids.

“Reverberation is a particularly nasty element of noise,” he says. “An important characteristic of speech is that, on certain measures, it has a very regular, statistically predictable pattern or temporal structure. For example, relative to pitch, the structure is a very rapid [eg, 5-10 millisecond] cue in vocal sounds. At the syllable level, where the syllables alternate, a slightly slower periodicity is produced on the order of a few hundred milliseconds—and these patterns help people lock onto the speech. In simplistic terms, reverberation tends to fill in the gaps between these cues.”
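As a rough illustration of the time scales Assmann mentions, a repetition period converts to a rate by taking its reciprocal. The sketch below is only arithmetic on the figures quoted above; the function name is hypothetical, and the 200-300 millisecond syllable range is an assumed stand-in for “a few hundred milliseconds,” not a figure from his research.

```python
def period_ms_to_hz(period_ms: float) -> float:
    """Convert a repetition period in milliseconds to a rate in hertz."""
    if period_ms <= 0:
        raise ValueError("period must be positive")
    return 1000.0 / period_ms


# Voice-pitch periods quoted in the text: 5 to 10 milliseconds.
# The longer period gives the lower frequency, so it comes first.
pitch_range_hz = (period_ms_to_hz(10.0), period_ms_to_hz(5.0))

# Syllable periods: the text says "a few hundred milliseconds";
# 200-300 ms is an ASSUMED illustrative range, not a quoted value.
syllable_range_hz = (period_ms_to_hz(300.0), period_ms_to_hz(200.0))

print("pitch cue (Hz):", pitch_range_hz)
print("syllable rhythm (Hz):", syllable_range_hz)
```

Under these assumptions the pitch cue repeats on the order of a hundred or more times per second, while the syllable rhythm repeats only a few times per second, which is why the two cues occupy such different time scales.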

Because a lot of information is contained in the signal itself, Assmann is trying to identify some of the “design features” of speech that make it separable from noise and competing speech: “One of the experiments I’ve done looks at the perception of competing sentences presented as two people talk simultaneously. These voices start and stop talking at the same time, and the listener’s task is simply to write down all the words they hear. Then I use a vocoder [a kind of speech synthesizer] to systematically vary the characteristics of the two voices, looking at how differences in pitch, continuity, naturalness of the signal, etc, can influence speech perception.”

When two voices are simultaneously producing all the components that make up speech, says Assmann, those vocalizations will tend to fuse in perception if they are at the same pitch. In these cases, the information is essentially lost. “But as you separate the two pitches, listeners get progressively better at hearing sounds from separate voices.”


Education and Hearing Care

The head of audiology at the Callier Clinic is Philip Lee Wilson, AuD, who is in charge of clinical services, supervising 12 staff audiologists. The clinic performs diagnostics, hearing aid dispensing, and aural rehabilitation, as well as educational, industrial, and cochlear implant services for the preschool, the Dallas Regional Day School of the Deaf, and other facilities in the Dallas area.

Wilson, Carolyn Musket, MA, and Carol Geltman Cokely, PhD, help drive the audiology program, which includes the AuD, PhD, and AuD-PhD degrees. All three audiologists play a role in teaching within the AuD program, and assist in establishing research protocols and maintaining the educational program. US News and World Report has consistently ranked the UTD/Callier audiology program in the nation’s top 20.

The Callier Center preschool and elementary deaf education program, which serves children ages 2-5, is headed by Karen Clark, MA. Normal-hearing children enrolled in the preschool come from all areas of the community, while children with hearing impairments are placed through the Dallas Independent School District. “All of the children in the program learn to view each other as normal kids,” says Clark. “We have provided a preschool culture that is very open and accepting not only to different languages like ASL or to kids wearing hearing aids, but we also have a very large mix of cultures, with a lot of Hispanic and Asian families. Many of the parents who select us for their private preschool do so because of the multicultural setting we offer. They want to come here, and our program is accredited by the National Assn. for the Education of Young Children.” Student interns also gain experience in the preschool, working with educational audiologist Jacqueline Campbell, AuD.

In 2000, the preschool obtained a grant from the Texas Higher Education Coordinating Board to develop resource materials for health care professionals and families of infants with hearing loss. In connection with the Texas universal newborn hearing screening (UNHS) law, an educational tool called the Texas Connect Family Resource Guide and website (www.callier.utdallas.edu/txc.html) has been developed for parents and professionals in the counties that surround Dallas. Texas Connect offers a series of 12 “Topic Cards” that inform parents about a variety of issues; a “Parent-to-Parent” section that features the experiences of parents raising hearing-impaired children; advice on navigating the state’s health care and educational systems; and other materials.

In addition to these types of experiments, Assmann and Bill Katz, PhD, at the Callier Center have been looking at adults’ perception of children’s vowels. Children’s voices present an interesting problem for models of speech perception because they are high-pitched and, in addition, the frequencies of their formants are shifted upwards relative to those of adults.

“The human nervous system is very good at performing sound separation tasks,” says Assmann. “So that is the reason for developing models of perceptual analysis. Looking at the different kinds of signal representation and processing strategies can lead to new ideas [for separating speech from noise]. I’ve also been interested in trying to predict how well people will do in certain listening conditions, and also to predict what mistakes will lead to listening errors.”

Mapping in the Auditory Cortex and Brain Plasticity: Michael Kilgard, PhD, and his colleagues are exploring the basic principles of plasticity in the auditory cortex, with the hope of assembling these principles into a functional general theory of cortical self-organization. The research could lead to more groundbreaking ideas for helping people who have not appropriately recovered from a wide range of hearing losses.

Kilgard explains that his work is based on the tonotopic mapping of the auditory system: “If you train on some particular part of the frequency band, you’ll get better at tasks in that range, and that training is reflected in the improvement in the percentage of the cortex’s response for that stimulus. In other words, these maps exist, they’re highly organized, and they’re highly flexible…We’ve become interested in how humans learn something as complex as speech, and that has emboldened us to jump right in and train animals on complex tones. We then see how their brains change in response to this learning.”

Kilgard is observing rats to see how their auditory cortex neurons become “tuned.” Essentially, the rats are trained to respond to a complex set of tones within a series of different experiments which include the use of stimulating electrodes. The result is the production of highly detailed maps of the auditory cortex. The response properties of primary auditory cortex neurons are reconstructed from up to 100 microelectrode penetrations, and the reorganizations that result are among the largest ever recorded in the primary sensory cortex.

“Most of the hearing problems that hearing care professionals see are the result of what happens after the brain has already solved, or is in the process of solving, most of the big processing problems,” says Kilgard. “However, if somebody shows a deficit for neurological functions, chances are those problems are going to be much, much worse…For us, the diversity of benefit that people get from hearing remediation is a reflection of their training. But why are there such diverse results?”

Kilgard’s research has far-reaching implications. By determining how plasticity contributes to cortical representation, a number of disorders in which abnormal plasticity processes have been implicated (eg, tinnitus, epilepsy, Alzheimer’s disease, dyslexia) could be addressed. For example, Kilgard plans to use cholinergic modulation (the manipulation of acetylcholine, a neurotransmitter that modulates neuronal activity in the brain) to accelerate functional recovery from head trauma and stroke. He says that studies of cortical plasticity have already proven useful in designing treatment strategies for a number of neurological disorders, and it seems likely that a more complete understanding of plasticity will facilitate the application of neuroscience principles in clinical settings.

Aural rehabilitation, FM systems, and auditory training: Several aural rehabilitation sessions at UTD/Callier have been developed by Linda Thibodeau, PhD, including the SIARC (Summer Intensive Aural Rehabilitation Conference) and a mini-version called SPARC (Spring Aural Rehabilitation Conference). Additionally, she has developed a series of AR classes for people purchasing hearing aids and for parents of children diagnosed with hearing loss.

Thibodeau has been researching the use of FM systems with infants and young children. “I am a firm believer in getting the best signal-to-noise ratio possible,” she says, “and I would put an FM system up against any digital hearing aid to hear in noise.” She recently finished a pilot project in which parents wore the FM transmitter and their young child wore the FM receiver. The parents reported increased vocalizations in their children and increased access, and said they were more likely to provide appropriate language models because the microphone reminded them to do so. “I think there is a potential for the caregiver to gain more acceptance of the hearing loss and feel more a part of the process.”

Thibodeau is also involved in an NIH-supported grant, with colleagues from the University of Texas at Austin and the University of Kansas. The purpose of the project is to compare the benefits of computerized programs in children with language impairments.

Research From All Angles

It can be seen that there are many UTD/Callier hearing scientists working on a number of similar themes—but from widely divergent disciplines and perspectives. For example, the issue of why two patients, who have what appears to be the exact same kind of hearing loss, can experience different success with a CI is being addressed by at least a half-dozen researchers from behavioral, electrophysiological, and neuroanatomical contexts. This makes for a lot of fruitful collaboration, exchange of ideas, and intriguing conversation around the water cooler.

“Our long-term goal is to develop exceptional research and researchers,” says Ross Roeser. “With the paucity of students who are now identifying research as their primary area, I think UTD/Callier really has a unique role to play in audiology. We’re one of the few programs that can realistically offer a degree like the AuD-PhD, and our faculty and university focus on both basic science and applied research. Thus, we have the capability to continue developing students who have a solid research background. The entire university, in fact, is a research institution. We have a lot of very bright, talented people here. I think it’s fitting that we don’t have a baseball team; we don’t have a basketball team; we don’t have a football team. But we’re number one in chess.”

And not far behind that in audiological research, education and hearing care.


A Top-Down Approach to a Bottom-Up Problem

UTD/Callier researcher Emily Tobey, PhD, points out that there remain great disparities between “star” cochlear implant recipients and those who don’t do as well. To better understand the factors underlying these differences, she and her colleagues have turned to the techniques of functional brain imaging. Using single photon emission computed tomography (SPECT), they are comparing the brain activity of normal-hearing subjects and cochlear implant subjects while they listen to and watch a videotape. This SPECT scan is then contrasted with one taken while the subjects watch a videotape with no sound present. Upon subtracting the SPECT scans generated during these two conditions, the researchers obtain a picture of those brain areas that are activated due to listening only.

“We have people listen under many different conditions, including listening with the right ear, left ear, binaurally, using filtered speech, etc,” says Tobey. “We can also look at the implant patients preoperatively to understand how the cortex is responding to many signals that arise due to a compromised auditory system. Then, we can see what happens once they receive their cochlear implant, and how well their brain is ‘activated’ due to the implant.

“One thing we’ve found is that, for users who receive less benefit from CIs but still wear them all the time, they tend to get only a contralateral (to the ear of implantation) excitation. There is minimal ipsilateral activation of the cortex in most of these subjects. Additionally, this group of users has excitation occurring in the primary auditory cortex and not necessarily in other areas of the brain that are known to assist in speech processing. We’re really excited about this. Future work will focus on trying to characterize what this means.”

The obvious question, says Tobey, is what does one do in the event that a patient isn’t making adequate use of their implant?

Tobey’s research focuses on both “star” cochlear implant users and those who don’t derive as much benefit. Her team is attempting to map auditory systems via DADRs, P300s, and tasks involving mismatch negativity. The hope is to gain an electrophysiological measure of how well the patients are transmitting signals to the brainstem, then to contrast that information with what’s actually being received and processed by the cortex. Tobey and researchers at UT Southwestern Medical School have obtained a Dana Foundation Innovative Brain Imaging grant to look into devising non-traditional speech perception training or habilitation training programs for implant users who receive sub-optimal benefit from the devices.

The research has some far-reaching implications. “One hypothesis,” says Tobey, “is that, if damage exists in the hair cells at the periphery, then essentially the neural architecture has been changed, so there are fewer auditory nerve fibers. This leads to a weaker, more impoverished speech signal. Another possibility is that, although there is a diminished auditory architecture, the signal may still be getting to the cortex. However, the cortex may not recognize that this diminished signal is actually a representation of speech. In that instance, the brain is receiving the signal, but it’s not doing the additional processing necessary for understanding the signal or assigning meaning to it. That also could happen if the cortex were generally unresponsive to signals.”

Pharmacological trials are currently being developed to try different types of cortical stimulants in conjunction with traditional aural rehabilitation strategies to see if cortical activity can be heightened so the signals could be recognized as speech.

If this isn’t the case, and the pharmacological approach doesn’t work, the researchers might still be able to go back to DSP engineers and see if there is a way to devise a more robust DSP strategy for signal representation to these particular individuals. “This represents a real ‘top down’ approach to a ‘bottom up’ problem,” says Tobey.
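The subtraction step described in this sidebar, in which a sound-off scan is subtracted from a sound-on scan to isolate listening-related activity, can be sketched in a few lines. This is a toy illustration only: the function name, the 2-by-3 grids, and every number in them are invented for the example, and real SPECT analysis would also involve steps such as image registration, normalization, and statistical testing that are not shown here.

```python
def subtract_maps(listen_and_watch, watch_only):
    """Element-wise difference of two equally sized 2-D activity maps."""
    if len(listen_and_watch) != len(watch_only):
        raise ValueError("maps must have the same number of rows")
    difference = []
    for row_a, row_b in zip(listen_and_watch, watch_only):
        if len(row_a) != len(row_b):
            raise ValueError("maps must have the same number of columns")
        difference.append([a - b for a, b in zip(row_a, row_b)])
    return difference


# HYPOTHETICAL activity values (arbitrary units), not measured data.
video_with_sound = [[12, 30, 11],
                    [10, 28, 9]]
video_without_sound = [[11, 14, 10],
                       [10, 13, 9]]

# What remains after subtraction is the activity attributable to listening.
listening_only = subtract_maps(video_with_sound, video_without_sound)
print(listening_only)
```

In this made-up grid the middle column stands out after subtraction, which mirrors the idea of highlighting regions that respond to sound rather than to the video alone.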

Acknowledgements

The Hearing Review thanks the UTD/Callier staff members who took time out of their busy schedules to be interviewed for this article. Special thanks are extended to Callier Director Ross Roeser, PhD, and Eloyce Newman, head of public information.