Tech Topic | September 2016 Hearing Review

This study shows that the combined benefit of audio streamed bilaterally via MFi to both hearing aids, visual cues through FaceTime, and extended bandwidth is significant. Compared with an acoustic phone used with one hearing aid, this method of communication delivered an average benefit of 77% in speech understanding on the phone for individuals with normal hearing or mild-to-moderate hearing impairment, and 72% for individuals with severe-to-profound hearing impairment. This is worth remembering when carrying out a phone conversation, whether in quiet or in noise and whether or not you have a hearing impairment. Importantly, the benefits of FaceTime and similar apps should be part of hearing aid counseling and orientation. In some cases, the technology could make the difference that enables a patient to use a telephone.

Speaking to someone on the telephone in a noisy situation can be challenging for many people who use hearing aids, yet successful telephone communication is important. Not only has it been linked to improved self-reported quality of life,¹ but it is also crucial for communicating successfully at home and at work.

Unfortunately, communicating via the telephone is difficult for several reasons.² One reason stems from the advent of mobile technology: phone calls increasingly take place in noisy situations, such as in the car, on a busy street, or at train stations and airports. Another is the need to place the telephone accurately by hand near the hearing aid and to maintain that placement throughout the call. Furthermore, traditional telephone communication takes place without visual cues.

Given the importance of speaking on the telephone, a number of solutions have emerged over the years: acoustic mode, telecoils, and wireless streaming. Many hearing aids offer a dedicated telephone program, or acoustic mode, that amplifies the telephone bandwidth (300-3400 Hz) while filtering out the sounds outside that band, which the telephone does not transmit. Although the acoustic mode achieves some success, this filtering approach still amplifies both the ambient sound and the speech and therefore does not effectively improve the signal-to-noise ratio (SNR).
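
Why a common gain cannot help can be shown with one line of arithmetic. As a simplifying assumption, let the speech power $P_s$ and the noise power $P_n$ both lie inside the telephone pass band, and let the acoustic mode apply the same power gain $G$ to each. The SNR in decibels is then unchanged:

$$
\mathrm{SNR}_{\text{out}} = 10\log_{10}\frac{G\,P_s}{G\,P_n} = 10\log_{10}\frac{P_s}{P_n} = \mathrm{SNR}_{\text{in}}
$$

Filtering can remove noise that falls outside 300-3400 Hz, but any noise sharing the band with the speech is amplified by exactly as much as the speech.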

Telecoils have been used to overcome the problem of amplifying speech and background noise together. They are effective because the signal is sent directly from the telephone to the hearing aid via inductive coupling, so the hearing aid microphone does not need to remain on; as a result, the SNR can be significantly improved. The telecoil can be used in either telecoil-only (T) mode or telecoil-plus-microphone (MT) mode, so wearers can choose between hearing the ambient sound and improving the SNR, depending on the situation or their preference.

Although very useful for many people, the telecoil works poorly with telephones that do not produce strong magnetic leakage, and the hearing aid user must know how to orient the telephone for the best signal reception.

ReSound has two wireless streaming solutions for cell phones, as illustrated in Figure 1. One uses an intermediate accessory (the Phone Clip+) to connect a Bluetooth-enabled telephone to the hearing aid: the Phone Clip+ communicates with the telephone via the standard Bluetooth protocol and with the hearing aid via the ReSound proprietary protocol.

The other solution provides direct streaming from an iPhone (iPhone 5 or later) to Made for iPhone (MFi) hearing aids. No intermediate accessory is required, although the hearing aid user does need to speak into the phone’s microphone.

One advance brought by smartphones is the ability to make video calls. With video call apps such as Skype or FaceTime, an image of the person at the other end of the call is displayed together with the audio. This gives the hearing aid user the opportunity to benefit from both visual and auditory cues.

The inclusion of visual cues through video is important, as visual information is estimated to make up approximately two-thirds of all communication.⁴ A previous study² of individuals with severe-to-profound hearing impairment demonstrated that video calling with audio streamed to the hearing aids yields better speech recognition than streaming without visual information. In that study, video calling provided people with severe-to-profound hearing impairment an average benefit of 23% in speech understanding, with wireless streaming via the Phone Clip or MFi in both unilateral and bilateral conditions. However, this benefit has not been explored for individuals with normal hearing or those with mild-to-moderate hearing loss.

The aim of the present study was to investigate the potential benefits of wireless streaming technology with MFi hearing aids over a conventional acoustic telephone condition. Specifically, we examined the degree of benefit contributed by the streaming technology, the application of binaural listening, and the addition of visual cues.

Study Methods

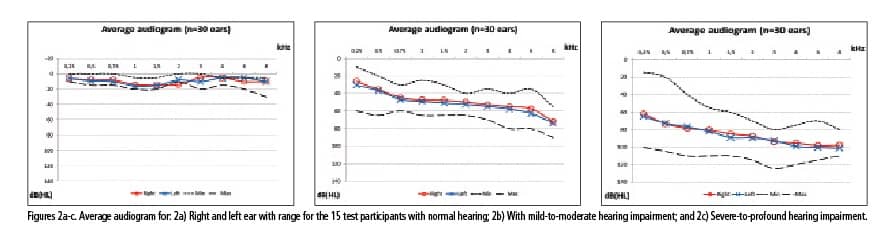

Test participants. A total of 45 adults participated in this study: 15 with normal hearing, 15 with mild-to-moderate hearing impairment, and 15 with severe-to-profound hearing impairment. The average audiograms for the three study groups are shown in Figure 2a-c.

The 15 individuals with normal hearing (8 males, 7 females) had a median age of 52 years (1st quartile: 49; 3rd quartile: 55). The 15 individuals with mild-to-moderate hearing impairment (9 males, 6 females) had a median age of 76 years (1st quartile: 69; 3rd quartile: 80) and a median of 8 years of experience with amplification (1st quartile: 5; 3rd quartile: 15).

The 15 individuals with severe-to-profound hearing impairment (10 males, 5 females) had a median age of 77 years (1st quartile: 69; 3rd quartile: 79) and a median of 32 years of experience with amplification (1st quartile: 25; 3rd quartile: 39).

The results for the individuals with severe-to-profound hearing impairment were previously published by the authors in the February 2015 edition of The Hearing Review.²

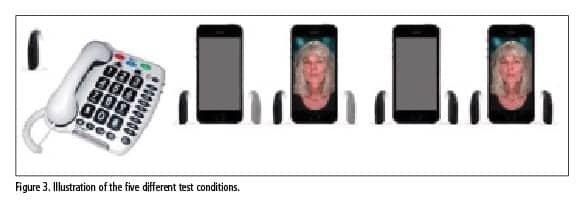

FaceTime is a video calling app for Apple devices. The listening conditions (Figure 3) were:

- Acoustic phone (unilateral acoustic coupling);

- Made for iPhone (MFi) direct streaming from iPhone to hearing aids (Bluetooth coupling):

  - Unilateral without FaceTime;

  - Unilateral with FaceTime;

  - Bilateral without FaceTime; and

  - Bilateral with FaceTime.

In the acoustic phone condition, speech was delivered through a landline phone receiver (Amplipower 40, Geemarc) connected to the iPhone 5s headphone jack via a phone patch (Rolls), so that the iPhone could also play speech through the acoustic phone. Before testing, each participant was helped to find the ideal receiver placement by listening to a constant signal played through the phone receiver. In the MFi conditions, speech was streamed directly from the iPhone 5s to the MFi hearing instruments. The iPhone 5s was mounted on a microphone boom at a comfortable viewing distance.

Participants with normal hearing and those with mild-to-moderate hearing impairment were fitted with ReSound LiNX 961, with an open dome and a power dome, respectively. Participants with severe-to-profound hearing impairment were fitted with ReSound ENZO 998 with their own closed earmolds. The hearing instrument microphones were active bilaterally in all test conditions, both to make the test more realistic and because this is the default setting in the Aventa fitting software. The presentation level for each test condition was calibrated in a 2cc coupler to prevent gain differences from affecting the speech intelligibility results. The participants’ hearing instruments were fitted according to the ReSound proprietary gain prescription rule, Audiogram+.

Materials. Testing used the Dantale I Danish audiovisual speech material. Eight 25-word adult word lists and four 20-word pediatric lists were used. The speech was presented in a constant amplitude-modulated, speech-shaped background noise.⁵ The pediatric lists were used for unaided testing and the adult lists for aided testing. The speech material presented in the audio-only conditions was band-pass filtered to match the frequency response of a telephone transmission (ie, 300-3400 Hz). The audiovisual material was not band-pass filtered, because FaceTime audio sent over the Internet is not subject to the same bandwidth limitation as a traditional phone network.
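
What band-limiting to 300-3400 Hz does to a signal can be illustrated with a minimal frequency-domain sketch. The article does not describe the authors' filter; this example assumes an idealized "brick-wall" response that zeroes every DFT bin outside the band, and the function name `telephone_bandpass` and the 8 kHz sample rate are hypothetical choices for illustration only.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform (O(N^2)); adequate for short examples."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Inverse of dft(); returns complex samples."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def telephone_bandpass(x, fs, low_hz=300.0, high_hz=3400.0):
    """Hypothetical ideal band-pass: keep only components between low_hz and high_hz."""
    n = len(x)
    spectrum = dft(x)
    for k in range(n):
        # Bin k is frequency k*fs/n; bins above n/2 mirror the negative frequencies,
        # so min(k, n - k) gives the same decision for each bin and its mirror.
        freq = min(k, n - k) * fs / n
        if not (low_hz <= freq <= high_hz):
            spectrum[k] = 0
    # Real input plus a symmetric mask gives a real output; the imaginary part is rounding error.
    return [sample.real for sample in idft(spectrum)]


if __name__ == "__main__":
    fs = 8000  # assumed sample rate (Hz)
    n = 160    # 50 Hz bin spacing, so 100 Hz and 1000 Hz fall exactly on bins
    t = [i / fs for i in range(n)]
    low_tone = [math.sin(2 * math.pi * 100 * ti) for ti in t]   # below the band
    mid_tone = [math.sin(2 * math.pi * 1000 * ti) for ti in t]  # inside the band
    mixed = [a + b for a, b in zip(low_tone, mid_tone)]
    filtered = telephone_bandpass(mixed, fs)
    error = max(abs(f - m) for f, m in zip(filtered, mid_tone))
    print(f"max deviation from the 1000 Hz tone: {error:.2e}")
```

A real telephone channel rolls off gradually rather than with a brick wall; a practical implementation would more likely use a causal IIR or FIR filter, but the ideal mask makes the pass band easy to see.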

Procedure. Each participant was tested in all five phone conditions. The order of test conditions for each participant was randomized using a Latin square, and testing was completed in two sessions separated by at least 2 weeks. Each session began with two training rounds, one with the audio-only signal and one with the audiovisual signal, to familiarize participants with both the audio and visual parts of the material.
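
A Latin square orders the conditions so that each one appears exactly once in every participant's sequence and once in every presentation position. The exact square used in the study is not reported; the sketch below assumes a simple cyclic square with shuffled condition labels and shuffled rows, and the short condition names and the helper functions are hypothetical labels for the five conditions listed above.

```python
import random

# Hypothetical short labels for the five phone conditions.
CONDITIONS = [
    "acoustic",    # acoustic phone, unilateral
    "mfi_uni",     # MFi streaming, unilateral, no FaceTime
    "mfi_uni_ft",  # MFi streaming, unilateral, with FaceTime
    "mfi_bi",      # MFi streaming, bilateral, no FaceTime
    "mfi_bi_ft",   # MFi streaming, bilateral, with FaceTime
]


def cyclic_latin_square(items):
    """Row i is the item list rotated left by i positions."""
    n = len(items)
    return [[items[(i + j) % n] for j in range(n)] for i in range(n)]


def assign_orders(num_participants, conditions, seed=None):
    """Give each participant a test order taken from a shuffled cyclic Latin square."""
    rng = random.Random(seed)
    labels = list(conditions)
    rng.shuffle(labels)  # randomize which condition occupies each symbol
    square = cyclic_latin_square(labels)
    rng.shuffle(square)  # permuting rows keeps every column a full set of conditions
    # Cycle through the rows when there are more participants than rows.
    return [square[p % len(square)] for p in range(num_participants)]


if __name__ == "__main__":
    for p, order in enumerate(assign_orders(15, CONDITIONS, seed=1), start=1):
        print(p, order)
```

With 15 participants and five conditions, each row is used three times, so every condition occurs equally often in every position. A cyclic square controls position effects but not carryover between adjacent conditions; a Williams design would address that and, for an odd number of conditions such as five, requires two squares.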

The aim of the test was to obtain a percent-correct score for each test condition. Speech and noise were presented at a constant SNR that was the same for all test conditions. For each participant, this SNR was set to the level that yielded approximately 60% correct with the audio-only signal, leaving room for scores in the other phone conditions to either improve or degrade. This also left room to demonstrate differences among the five test conditions, which were of greater interest than absolute performance.
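
Scoring reduces to simple arithmetic: with a 25-word adult list, each word is worth 4 percentage points. The sketch below assumes that "benefit" is the difference in percent correct between an iPhone condition and the acoustic phone condition, expressed in percentage points; the article does not state the formula, and the helper names and the word counts in the example are hypothetical, not study data.

```python
def percent_correct(words_correct: int, list_length: int = 25) -> float:
    """Percentage of words repeated correctly from one word list."""
    if not 0 <= words_correct <= list_length:
        raise ValueError("words_correct must be between 0 and list_length")
    return 100.0 * words_correct / list_length


def benefit(condition_score: float, acoustic_score: float) -> float:
    """Assumed definition: improvement over the acoustic phone, in percentage points."""
    return condition_score - acoustic_score


if __name__ == "__main__":
    # Hypothetical word counts for one participant (not study data).
    acoustic = percent_correct(5)   # 20.0
    streamed = percent_correct(15)  # 60.0
    print(benefit(streamed, acoustic))  # prints 40.0
```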

Speech-in-noise testing, including setting the SNR level, was controlled through a specially designed MATLAB graphical user interface.

Results

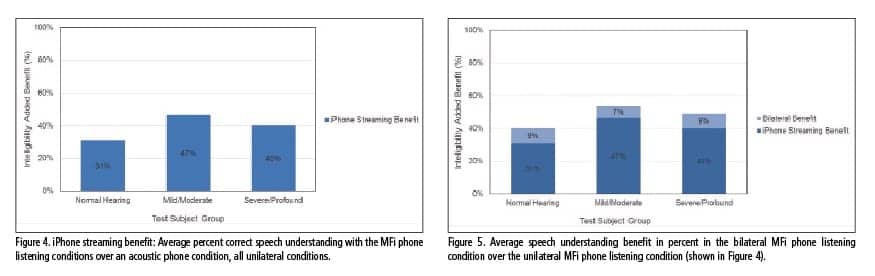

The results show the speech understanding benefit, in percent, that each study group obtained in the four iPhone listening conditions compared with the acoustic phone listening condition.

Comparisons using Tukey’s honestly significant difference (HSD) test reveal that participants with mild-to-moderate hearing impairment obtain greater benefit than participants with normal hearing (p<0.05). The difference in benefit between participants with severe-to-profound hearing impairment and participants with normal hearing is not significant (p=0.30), nor is the difference between participants with mild-to-moderate and those with severe-to-profound hearing impairment (p=0.53).

Overall, all three study groups hear significantly better when the speech material is streamed rather than delivered acoustically to the hearing instrument, even when it is streamed to only one hearing instrument and the background noise is amplified through both hearing instruments’ microphones.

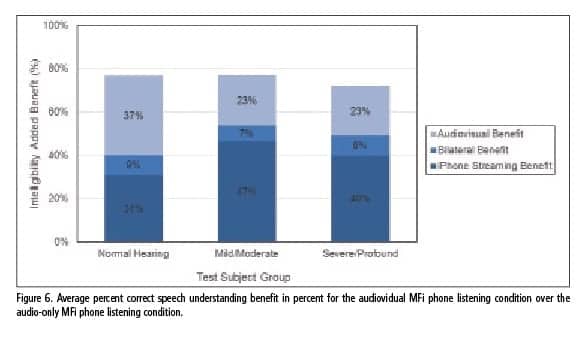

Speech understanding over the phone was also tested with the speech material streamed bilaterally to the hearing instruments using MFi direct streaming. Streaming the speech bilaterally provided an average additional benefit of 9% correct for participants with normal hearing, 7% for participants with mild-to-moderate hearing impairment, and 9% for participants with severe-to-profound hearing impairment (Figure 5).

An analysis of variance shows that this benefit is significant (p<0.001) for all three study groups. There was no significant difference between the benefit obtained for the audio and audiovisual conditions (p=0.98). Furthermore, there was no significant difference between the bilateral benefit obtained by the study groups (p=0.79).

On average, the three study groups thus gained 7% to 9% in percent-correct speech understanding with the audio signal streamed bilaterally to both hearing instruments, even though both hearing instruments’ microphones were amplifying the background noise at the same time.

The benefit of the audiovisual condition compared to audio-alone is statistically significant according to an analysis of variance (p<0.001). There is no significant difference in audiovisual benefit obtained between the unilateral and bilateral conditions (p=0.98).

Tukey’s HSD comparisons reveal that participants with normal hearing obtain significantly greater benefit than participants with mild-to-moderate hearing impairment (p<0.01) and participants with severe-to-profound hearing impairment (p<0.01). There is no significant difference in benefit between participants with mild-to-moderate and those with severe-to-profound hearing impairment (p=0.997).

On average, the three study groups understand 23% to 36% more on the phone when the audio signal is supported by visual information and extended frequency bandwidth, as FaceTime provides in both the unilateral and bilateral streaming conditions. Participants with normal hearing obtain a significantly higher benefit from this combination than the two groups of individuals with hearing impairment.

MFi streaming of audio bilaterally to both hearing instruments, combined with visual cues and extended bandwidth through FaceTime, provides an average benefit of 77% in speech understanding on the phone for individuals with normal hearing or mild-to-moderate hearing impairment, and an average benefit of 72% for individuals with severe-to-profound hearing impairment. This benefit is relative to using a phone monaurally with acoustic pick-up through the hearing aid microphone. The difference in overall benefit between the study groups is not significant (p=0.67).
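
If each benefit is taken to be a difference in percent correct (an assumption; the article does not state the formula), the overall benefit decomposes exactly into the stepwise benefits. Using hypothetical notation $S_{\text{ac}}$, $S_{\text{uni}}$, $S_{\text{bi}}$ and $S_{\text{bi+FT}}$ for the scores in the acoustic, unilateral streaming, bilateral streaming, and bilateral-with-FaceTime conditions:

$$
S_{\text{bi+FT}} - S_{\text{ac}} = \underbrace{(S_{\text{uni}} - S_{\text{ac}})}_{\text{streaming}} + \underbrace{(S_{\text{bi}} - S_{\text{uni}})}_{\text{bilateral}} + \underbrace{(S_{\text{bi+FT}} - S_{\text{bi}})}_{\text{FaceTime}}
$$

The intermediate scores cancel, so the identity holds for any single listener. The stepwise averages reported above may pool over conditions and are rounded, however, so they need not add exactly to the 77% and 72% totals.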

Discussion

Speech recognition testing in this study showed that streaming the speech, instead of delivering it acoustically from a phone to one ear, significantly improves speech understanding, whether the listener has normal hearing, a mild-to-moderate hearing impairment, or, as previously shown,² a severe-to-profound hearing impairment. The reason for this improvement is likely twofold:

1) Placing the phone to optimize pickup of the phone signal during acoustic phone usage is challenging, and maintaining the correct position throughout the testing in this study—or, more importantly, during a phone conversation in real life—is even more challenging.

2) Streaming the speech signal to the hearing instrument provides a better SNR than delivering it through an acoustic phone.²

Speech understanding over the phone was also tested with the speech material streamed bilaterally to both hearing instruments using the wireless connectivity options included in the study. Streaming the speech to both hearing instruments, rather than to one alone, provided an additional significant speech understanding benefit for all three groups: participants with normal hearing, mild-to-moderate hearing impairment, and severe-to-profound hearing impairment. This finding agrees with previous research showing a significant benefit when speech was transmitted bilaterally during phone use in several different noise configurations. That benefit was attributed to binaural summation (or binaural redundancy) and binaural squelch.³

Adding visual information and extended frequency bandwidth through FaceTime provided a further speech understanding benefit. Visual cues are known to improve speech understanding,⁶,⁷ and the extended frequency bandwidth is expected to add benefit as well. Participants with normal hearing obtained a significantly higher benefit from this combination than the two groups of individuals with hearing impairment, likely because it is easier for them to “fill in the gaps” when receiving this extra input.

Taken together, the combined benefit of MFi bilateral streaming to both hearing instruments, visual cues, and extended bandwidth through FaceTime was significant. On average, this method of communication delivered a benefit of 77% in speech understanding on the phone for individuals with normal hearing or mild-to-moderate hearing impairment, and 72% for individuals with severe-to-profound hearing impairment. This is worth remembering when carrying out a phone conversation, whether in quiet or in noise and whether or not you have a hearing impairment.

Importantly, these findings indicate that the benefits of FaceTime and similar apps should be part of hearing aid counseling and orientation.

Conclusions

Today’s advances in hearing instruments and cell phone technology provide users of hearing instruments with several options for improving speech understanding over the phone. These options include streaming and the use of visual cues.

Streaming a speech signal increases speech understanding compared to delivering it acoustically via one hearing aid. Streaming speech bilaterally to two hearing instruments instead of unilaterally to one provides improved audibility due to binaural loudness summation. The addition of visual cues and extended frequency bandwidth through FaceTime also increases speech recognition.

Everyone, regardless of whether they have a hearing loss, can benefit from these options. The benefit is especially important for those with hearing loss, however, because their absolute performance is worse than that of people with normal hearing.

For those with severe impairment, this technology can mean the difference between being able to use the phone and not being able to use it at all. For those with milder losses, it can enable phone use in conditions where they otherwise could not manage.

Other key points that hearing care professionals can take away from this study include:

- The MFi phone handling strategies provide a significant speech understanding benefit compared with the acoustic phone, even in the unilateral condition. This applies to individuals both with normal hearing and with hearing impairment.

- A bilateral phone handling strategy provides a significant speech understanding benefit in both the audio-only and audiovisual conditions. This applies to individuals both with normal hearing and with hearing impairment.

- A visual phone handling strategy (FaceTime combined with MFi direct streaming) provides a significant speech understanding benefit to individuals both with normal hearing and with hearing impairment.

References

1. Dalton D, Cruickshanks KJ, Klein BE, Klein R, Wiley TL, Nondahl DM. The impact of hearing loss on quality of life in older adults. Gerontologist. 2003;43(5):661-668.

2. Jespersen CT, Kirkwood B. Speech intelligibility benefits of FaceTime. Hearing Review. 2015;21(2):28-33. Available at: https://hearingreview.com/2015/01/speech-intelligibility-benefits-facetime

3. Picou EM, Ricketts TA. Comparison of wireless and acoustic hearing aid-based telephone listening strategies. Ear Hear. 2011;32(2):209-220.

4. Gamble TK, Gamble MW. Interpersonal Communication. Thousand Oaks, Calif: Sage Publications; 2014.

5. Elberling C, Ludvigsen C, Lyregaard PE. DANTALE: A new Danish speech material. Scand Audiol. 1989;18:169-175.

6. Tillberg I, Rönnberg J, Svärd I, Ahlner B. Audio-visual speechreading in a group of hearing aid users: The effects of onset age, handicap age and degree of hearing loss. Scand Audiol. 1996;25:267-272.

7. Tye-Murray N, Sommers MS, Spehar B. Audiovisual integration and lipreading abilities of older adults with normal and impaired hearing. Ear Hear. 2007;28(5):656-668.

Original citation for this article: Jespersen CT, Kirkwood B. Speech Intelligibility Benefits of FaceTime: Advantages for Everybody. Hearing Review. 2016;23(9):20.