Part 1: The Evolution of Advanced Hearing Solutions

The implementation of digital technology in hearing instruments has radically changed the face of the hearing industry and the way in which hearing instruments are dispensed. Since their introduction in 1995*, digital instruments have played a decisive role in the recent consolidation of the industry, research and product development activities, and the livelihoods of dispensing professionals. Today they represent more than one-quarter (28.6%) of all hearing instruments dispensed.1 (*Editor’s note: It should be acknowledged that the Nicolet Project Phoenix digital hearing instrument was initiated in 1984, but the resulting instrument in 1989 was never made available commercially.2)

The Hearing Review asked seven industry experts on digital hearing instrument technology to participate in a “virtual panel discussion” and speculate on where digital technology has been, where it is today, and where it is headed in the future. Participants included Christian Berg, product manager for Phonak; Victor Bray, vice president of auditory research for Sonic Innovations; Francis Kuk, director of audiology for Widex Hearing Aid Co; Pierre Laurent, director of product marketing for Intrason France; Thomas Powers, director of audiology and strategic development for Siemens Hearing Solutions; Jerome Ruzicka, president of Starkey Laboratories; and Donald Schum, vice president of audiology for Oticon Inc. As with any speculation on the future, it was recognized during these interviews that their comments represented “best guesses” about some of the aspects pertaining to the present and future directions of digital instruments, and they were encouraged to provide candid and even wild ideas on what might be expected in the next 10 years and beyond.

The Evolution of DSP and Sophisticated Product Features

Many people in the hearing care field contend that the capabilities of digital instruments have surpassed the audiological knowledge required for the optimal implementation of the technology. Therefore, a frequently mentioned criticism of DSP instruments is that many of the devices essentially emulate the functions of advanced programmable analog devices. Most of the experts interviewed for this article agreed that this criticism was somewhat true for earlier-generation DSP aids; it was a necessary step in the evolution of the product class. “When DSP was first introduced as a useful technology in hearing aids,” says Jerry Ruzicka of Starkey, “the outcome of the processing was similar to what was available from a programmable platform. I think we’re just now seeing the real benefits of DSP emerging in the marketplace. We’re beginning to do things with digital technology that simply cannot be done in the analog realm. The first success was getting DSP to work with the 1 V battery, and now the true processing power of the technology is emerging.”

“In some cases, the comment that manufacturers digitally implemented analog functions is a valid one,” says Victor Bray of Sonic Innovations. However, he is quick to add that even early versions of DSP allowed the implementation of more technological features into an equivalent package (physical size and energy drain) than analog instruments. “It’s true that there have been digital instruments released that had as their core signal processing the equivalent of an analog programmable aid. Even today, there are WDRC digital chips that are very similar to the previously proven two-channel WDRC analog chips of the 1990s. On the other hand, there were also many other product introductions that represented significant departures from the companies’ prior analog products. The point is that the sophistication level in digital products varies. So, it’s true that some of the DSP instruments are digital implementations of older analog designs. But the new generation of digital aids are high-sophistication units that are truly different from anything found in the analog domain.”

“An important point to understand,” says Donald Schum of Oticon, “is that, when we moved into the digital realm, we did not change the core audiological purpose of fitting hearing instruments: to use multichannel, nonlinear processing as a solution for sensorineural hearing loss (SNHL). That was a solution developed in the early 1990s in analog programmable aids and, as we moved into digital platforms in the late-1990s and 2000s, that core purpose has remained the same. When you look at virtually all high-performance products, they are exclusively multichannel, nonlinear processing devices. In some ways, it really is taking what we had in analog platforms and moving it to digital. However, what has changed are the enhancements that are possible because of the technology platform, including enhanced shaping, compression, feedback, occlusion and noise management, directionality, and other functions.”

“Of course digital hearing aids are duplicating analog functions,” says Pierre Laurent of Intrason France. “[The basic concepts behind] selective amplification and compression have been used for years and are necessary for successful fittings. Digital technology allows the setting of more precise response curves and more precise loudness equalization. To simplify, if [hearing loss] problems are fixed within a fitting, the end-user has an excellent chance to hear well in quiet. But they may also suffer from other troubles like reduced frequency selectivity, temporal resolution, temporal integration, troubles of pitch perception and frequency discrimination, and sound localization [especially when trying to hear in noise].” Laurent says these problems are currently being studied by psychoacousticians and audiologists, and understanding of them continues to grow. “Before DSP technology was available, this knowledge could not be used by the manufacturers. DSP technology will permit us to develop strategies to compensate for these troubles one by one.”

Audiological Alchemy: Solving Age-Old Problems

Particularly with regard to hearing in noise, there was a belief on the part of consumers and even some dispensing professionals that DSP would be a panacea for improving signal-to-noise ratios (SNR). “I think the biggest negative, in terms of expectations,” says Schum, “was the realization of the professional community that, although we had moved into the digital platform, the instruments still did not solve the speech-in-noise problem. The limitation was not that we’d moved onto the DSP platform, but that an effective algorithm that could separate speech signals and real-world noise did not exist.”

“I don’t believe it has been clinically proven that any DSP algorithm alone can improve the signal-to-noise ratio, thus improving speech intelligibility in noise without the aid of some type of directional system,” says Ruzicka. “But I still believe there is opportunity for that to occur in the future. There are many things we can do relative to intelligent features that allow the hearing aid to know when it’s in the presence of speech or noise, and to adjust the instrument’s signal processing accordingly. Even if we are only making things more comfortable for the patient, that person will almost certainly receive benefit. However, the ability to subtract the noise, separate the speech from noise, and thus change the signal-to-noise ratio is still ahead of us.”

Bray disagrees, pointing out that three recent studies have demonstrated that a Sonic Innovations digital aid improves SNR without the use of a directional microphone (see sidebar).

In truth, it was not a huge leap of faith for dispensing professionals to have had high hopes for an immediate digital solution to hearing in noise. Decades of hearing industry and acoustical research, using large computers and/or various microphone systems, had produced hopeful results. “The majority of research on digital signal processing in the past 30 years involved the enhancement of speech in noise through various techniques,” says Francis Kuk of Widex, “so when digital hearing aids were released, many people automatically assumed that they would improve speech in noise…[People] believed that, because it’s digital, you could manipulate the signal in any way. But the research done on DSP in the past 30 years has been performed on huge computer systems and not on wearable hearing aids. When we first introduced our digital aids 5-6 years ago, they had to be introduced in wearable sizes; the lesson is that size still does matter in terms of processing capacity. The digital hearing aids of 6 years ago were necessarily limited in what they could, and could not, do even though they were applying 100% digital processing.”

“Digital technology is not the same as fully programmable,” says Christian Berg of Phonak. “The real advantage to digital instruments is the ability to go in and change the performance of the existing instrument. Digital has brought a lot to the table, but it hasn’t changed the fact that we are amplifying and compressing and expanding signals.”

Digital processing also introduces other artifacts that can cause problems. “Digital signal processing has a different set of issues that can cause problems,” says Bray. “Going digital may introduce noise, degrading the signal-to-noise ratio (SNR). This is due to aliasing errors (low-frequency noise), imaging errors (high-frequency noise), and quantization errors (raises noise floor). In addition, going digital introduces a processing delay (group delay) ranging from 1-10 milliseconds. If the digital implementation is properly done, these factors are insignificant, but if poorly done, the introduced noise and longer delay times will significantly degrade the sound quality.”
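Two of the quantities Bray mentions can be estimated with simple arithmetic. The sketch below is illustrative only: the bit depths, the 16 kHz sample rate, and the 32-sample buffer are assumptions chosen for the example, not figures from any product discussed here, and the function names are hypothetical. It measures the signal-to-quantization-noise ratio of a full-scale sine wave rounded to a given number of bits, and converts a buffer length in samples into a delay in milliseconds (one possible contributor to the overall processing delay).

```python
import math


def quantization_snr_db(bits, num_samples=10000, cycles=97):
    """Measure the SNR (dB) of a full-scale sine after uniform rounding to `bits` bits."""
    levels = 2 ** (bits - 1)  # quantization steps per unit of amplitude
    signal_power = 0.0
    noise_power = 0.0
    for n in range(num_samples):
        x = math.sin(2 * math.pi * cycles * n / num_samples)
        q = round(x * levels) / levels  # nearest quantization level
        signal_power += x * x
        noise_power += (x - q) ** 2     # quantization error raises the noise floor
    return 10 * math.log10(signal_power / noise_power)


def buffer_delay_ms(samples, sample_rate_hz):
    """Delay contributed by buffering `samples` samples at the given sample rate."""
    return 1000.0 * samples / sample_rate_hz


if __name__ == "__main__":
    for bits in (12, 16):
        rule_of_thumb = 6.02 * bits + 1.76  # textbook estimate for a full-scale sine
        print(bits, "bits:", round(quantization_snr_db(bits), 1), "dB measured,",
              round(rule_of_thumb, 1), "dB estimated")
    print("32-sample buffer at 16 kHz:", buffer_delay_ms(32, 16000), "ms")
```

Under these assumptions, each additional bit lowers the quantization noise floor by about 6 dB, and a 32-sample buffer at 16 kHz accounts for 2 ms of delay, which falls within the 1-10 millisecond range Bray cites.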

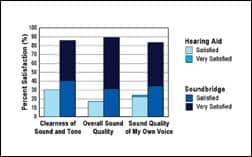

Sidebar: Are Hearing-in-Noise Tests Fair Enough?

While an abundance of subjective clinical data shows a preference for digital hearing instrument usage in noisy environments, little objective test data supports this claim. However, a persuasive argument can be made that current hearing-in-noise tests may not be sensitive enough or may only be telling part of the story. There are essentially two points to this argument. The first is that the tests, like the Hearing in Noise Test (HINT) and Speech in Noise (SIN) test, assess the hearing of people in a specific laboratory-created environment, which may not reflect the wide range of listening settings for the average hearing instrument user. The second point is that there is probably a lot more to hearing in noise than simply speech intelligibility; comfort, reduced fatigue, and other ergonomic factors may play crucial roles in a person’s ability to hear over the course of a day in many different listening settings.

When asked about the sensitivity of the tests, Francis Kuk says the current speech-in-noise tests may not reflect the entire picture. “I think there are two issues,” says Kuk. “One is whether a DSP aid is capable of providing a speech-in-noise benefit. In other words, has a manufacturer developed the kind of algorithm that can accomplish this task? The second issue is the objective tests themselves, and my personal opinion is that I don’t think the tests are sensitive enough. Just think about it: you might spend 20 minutes in the lab with a particular kind of speech test. You have to have a lot of faith in your test methods and rationales to expect a patient to give you a full, accurate description of the benefit of the hearing aid. What kind of test condition is capable of assessing all the environments that the listener encounters? We’ve seen this [problem] in the lab. For example, we may use the SIN test, and the results suggest that there is a 5% benefit [when using the noise reduction algorithm], but this difference is deemed statistically insignificant. And yet, when we test subjects in a real-world environment, the majority of them say, ‘Gee, I can certainly hear better in Situation X and Situation Y.’ ”

Kuk says that there are not enough test conditions, and the existing test conditions may not be specific enough to the patients’ environments and needs. “People are wearing hearing aids for days before they can tell how good or bad the devices work for them,” he says, “and yet in 20 minutes we expect lab tests to conclude how well the hearing aid noise algorithm works.”

A related issue, says Thomas Powers of Siemens, is in trying to interpret what the test results actually mean for both dispensing professionals and consumers. He explains that the HINT, for example, is a fairly sensitive test that focuses on the 50% speech intelligibility level. The test has the ability to assess patient performance in different SNRs and with different noise sources. However, the product performance differences can be relatively small, and interpreting the test results can be difficult.

“The problem is that those performance differences focus on the 50% intelligibility level,” says Powers. “So, if you get a 2 dB improvement and that translates into somewhere around 10%-20% speech intelligibility improvement, what we have to make clear is that the improvement is when the user is listening at the 50% level. If you put that same patient into an environment where they’re already hearing at the 80% level, the benefit might be gone. Or, if you put them in an environment that is more challenging, the improvement may not be there because the signal becomes inaudible. So, in some ways, it’s difficult for us to use the testing tools we have and translate the results into some understandable measure of benefit for consumers.”

Powers also says it may not be completely fair to compare performance across products in only a few specific environments or SNR settings. “The question is, Does the test result translate into a benefit in the real world where the patient is encountering a multitude of environments and may never again encounter the specific test environment found in the lab? …The HINT is a good measure at the 50% level.” Powers notes that this may be a problem endemic to tests of speech intelligibility. For decades, researchers have tried to find the patient’s maximum hearing level and then see how much improvement they can get with a new device. A 2-6 dB difference at the 50% criterion level might be seen as a huge improvement with the product in that specific environment. But when the subject emerges from the test booth and encounters a variety of other challenging listening situations, they may not view the difference as earth shattering.

Donald Schum points out that noise tests may also provide a very narrow metric of the performance of DSP products and tend to oversimplify the issue of hearing in noise. “There has been a tremendous number of other advances that digital products have brought that will not show up on a speech-in-noise test. Things like the flexibility of the product, the ability to match different processing needs on the part of the patient, the ability to implement directionality and feedback routines very effectively, and a variety of other dimensions, will not show up in a sound booth test of speech in noise. As far as comfort and a variety of other ergonomic types of measures, there have been great gains made. So I think it’s a matter of looking at satisfaction issues of the patients. I think if you look at some patients who have lived through the various generations of hearing instrument technology, in terms of their happiness with the products, we believe that aspect has advanced significantly throughout the industry.”

Christian Berg agrees: “I think the criticism of the testing procedures is fairly well-founded. The only proven way, so far, of fighting noise is to prevent it from entering the circuit in the first place [via directional microphones or the use of FM]. Obviously, with many of the strategies, you can’t get rid of the noise completely, but it may be wiser to describe the effect of the processing as listening comfort. If you have noise mixed with the desired speech or music signal, you may not be able to get rid of the noise, but you certainly can reduce its impact on the listener. There are a number of very nice algorithms out there doing a lot of things to the sound to make it easier to listen to. And that’s not an insignificant issue.”

While Victor Bray agrees with some of the above positions, he says that, if hearing improvement in noise can be demonstrated in an extremely adverse condition like the HINT, it is highly probable that benefit will also be experienced in other situations. He and colleague Michael Nilsson recently published data suggesting that the Natura 2 SE hearing instrument is capable of speech-in-noise improvement on objective test measures independent of directional microphone benefit (see November 2000 HR, pages 60-65 and December 2001 HR, pages 48-54). The benefit with the custom aids was independently verified at the Washington University School of Medicine and is currently being evaluated in a second replication study at the Cleveland Clinic, according to Bray.
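Powers’s caution about the 50% criterion in the sidebar can be illustrated with a simple model. The sketch below assumes a logistic psychometric function with a slope of 10 percentage points per dB at its midpoint; the curve shape, the slope, and the function names are illustrative assumptions, not HINT parameters. It shows how the same 2 dB SNR improvement produces a different intelligibility gain depending on where the listener starts on the curve.

```python
import math

SLOPE_PER_DB = 0.10  # assumed midpoint slope: 10 percentage points per dB


def intelligibility(snr_db, srt_db=0.0):
    """Hypothetical logistic curve: fraction of speech understood at a given SNR."""
    k = 4.0 * SLOPE_PER_DB  # a logistic curve has slope k/4 at its midpoint
    return 1.0 / (1.0 + math.exp(-k * (snr_db - srt_db)))


def snr_for_score(score, srt_db=0.0):
    """Inverse of the curve: the SNR at which the model predicts `score`."""
    k = 4.0 * SLOPE_PER_DB
    return srt_db + math.log(score / (1.0 - score)) / k


def gain_from_improvement(start_score, improvement_db=2.0):
    """Change in predicted score when the SNR improves by `improvement_db`."""
    start_snr = snr_for_score(start_score)
    return intelligibility(start_snr + improvement_db) - start_score


if __name__ == "__main__":
    for start in (0.50, 0.80, 0.95):
        gain = gain_from_improvement(start)
        print(f"starting at {start:.0%}: gain of {gain * 100:.1f} percentage points")
```

In this model, a listener starting at 50% gains about 19 percentage points from a 2 dB improvement, a listener starting at 80% gains about 10, and a listener starting at 95% gains fewer than 3. The benefit measured at the 50% point therefore overstates what the same listener would notice in easier conditions.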

Surprises in Digital Technology

There have also been several pleasant surprises with the implementation of digital technology in hearing instruments. Particularly in the areas of anti-feedback strategies, directionality and the control of attack and release times, digital technology has opened new possibilities.

Schum believes that the industry is now seeing the general effect of what is being experienced in all digital product types: namely decreasing chip sizes, increasing processing power, and decreasing battery drain—all of which allow for more complex processing. “We’re also seeing better algorithms starting to hit the market,” says Schum. “In particular, we are starting to see some very good anti-feedback algorithms being developed and presented. There hasn’t been a sea change in technology in moving into digital technology, but rather general expected improvements in several technical aspects…I also think the value of directionality caught some people by surprise. I think that moving onto a directional platform offered some unique and exciting possibilities for applying more effective directionality.”

Berg points to the dramatic change in designing products for the hearing care market. “I think the surprise that many people got was that there is a difference between the time domain and frequency domain, and also in the way these parameters behave,” says Berg. “Additionally, in an analog instrument, the sound quality is determined primarily by the architecture of the signal path; in the digital domain, you set up that signal path as you design the product. There is so much more you can do—good and bad—to the signal path in the digital domain compared to analog…In the analog domain, you’re configuring a number of amplifiers and filters, and they perform well in more-or-less all the settings and will give you a different overall performance. But you are not recreating or resynthesizing the sound as is the case with digital.”

The popularity of digital instruments, while expected by many, is still impressive. “When I first joined the industry in the early ’90s,” says Kuk, “we were making presentations on programmable aids, and less than 10% of all hearing aids were programmable for most of that decade. Then, all of a sudden, when digital hearing aids were introduced, programmables and digitals each grew to about 30% of the market within 5 years. That’s a substantial change in the way professionals are fitting patients. Something is right with digital aids, and there is obviously some benefit the digital hearing aids have provided.”

The acceptance of digital hearing instruments is even more pronounced in some European countries. “DSP instruments are 82% of the French market, according to the French hearing aid manufacturer association,” says Laurent. “The technology has switched from first- and second-generation DSP hearing instruments, which largely duplicated past and present analog functions with minor improvements, to third-generation DSP that allows new signal processing such as noise reduction, feedback management, and the first large steps in spectral and temporal enhancements.”

This rapid acceptance and technological progress have led several in the industry to speculate on the future of non-digital instruments. “Let’s face it,” says Berg, “programmable instruments were just an intermediate stage in hearing aid development. [They existed] because we couldn’t make a digital at that time, and we needed to put more functions into the instruments for enhanced functionality for the user and the fitter, as well as to reduce the number of different products for manufacturers. We’re now capable of placing a number of different processing schemes into each program. We’ve come to a point where we can do practically whatever we want; we just have to be more specific in determining [audiologically] what we want. The technological limitations aren’t gone, but they are no longer as significant as they once were.”

Powers says that he, too, is surprised at how quickly DSP has grown in popularity and, at the same time, taken on many different product variations: “I think the implementation of so many different amplification strategies across all the manufacturers has been the biggest surprise. I wouldn’t have guessed that there would be so many variations on how manufacturers are approaching their designs and their strategies. And all of these are based on fairly sound audiologic and scientific literature. For example, release times are implemented in widely diverse ways because there is literature that supports at least a couple different strategies. And all the products have contributed to the success of DSP advancements in certain categories. Therefore, one of the surprises is that the industry still hasn’t determined the best strategy for everyone. In a way, this is a good thing, because it continues to focus our endeavors on the pure research, as well as the applied research, that’s needed to find answers to some fundamental questions.”

The New Era of Audiological Rationales

Despite the wide variety of amplification strategies, the global objective for any advanced hearing instrument may sound like a well-worn mantra to those who have used analog WDRC hearing instruments for the past decade: to make soft meaningful sounds soft, to make loud sounds loud but not uncomfortable, and to make conversational speech sounds intelligible in as many environments as possible. Boiled down to the essentials, most manufacturers would agree that input-specific gain should be selected using clinically validated fitting algorithms (DSL, NAL-NL1, proprietary algorithms), directional microphone technology should be utilized whenever appropriate, and WDRC should be implemented for the majority of patients in enough channels to maximize audibility and intelligibility. Many digital instrument manufacturers might add to this list features like noise reduction, adaptive feedback and occlusion controls, spectral enhancement, microphone matching, etc.
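The idea of input-specific gain in a WDRC channel can be sketched as a static input/output rule. The example below is a minimal illustration with assumed values (30 dB of gain, a 45 dB SPL compression threshold, and a 2:1 compression ratio); these numbers and the function name are hypothetical and do not come from any fitting algorithm named above.

```python
def wdrc_output_level(input_db, gain_db=30.0, threshold_db=45.0, ratio=2.0):
    """Static input/output rule for one hypothetical WDRC channel (levels in dB SPL)."""
    if input_db <= threshold_db:
        # Below the compression threshold the channel is linear: full gain applies.
        return input_db + gain_db
    # Above the threshold, each extra dB of input adds only 1/ratio dB of output.
    return threshold_db + gain_db + (input_db - threshold_db) / ratio


if __name__ == "__main__":
    for level in (40.0, 65.0, 90.0):  # soft, conversational, and loud inputs
        out = wdrc_output_level(level)
        print(f"input {level} dB SPL -> output {out} dB SPL (gain {out - level} dB)")
```

With these assumed settings, a 40 dB SPL input receives 30 dB of gain, a 65 dB SPL input receives 20 dB, and a 90 dB SPL input receives 7.5 dB, so soft sounds are amplified most while loud sounds are held below uncomfortable levels.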

While these may sound like reasonable goals, achieving the above objectives involves many different features and amplification strategy pathways. The design considerations in one aspect of a hearing instrument may greatly affect another performance aspect of the aid. “All digital hearing aids are multichannel compressors,” says Bray. “However, all of the leading-edge products are clearly differentiated in their multichannel architecture (the core signal processing). This is the key differentiating feature today, and will be for the foreseeable future. Key factors in the competition will be the number of compression channels, the channel bandwidths and filter slopes, the attack and release time constants, and integration of advanced features such as spectral subtraction (for noise) and spectral enhancement (for speech) with the core signal processing.”
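The attack and release time constants Bray lists govern how quickly a compressor’s level estimate follows the input. The sketch below is one simple way to model this, assuming a one-pole smoother applied directly to levels in dB with separate coefficients for rising and falling input; real instruments may smooth in other domains, and the 5 ms and 100 ms values and the function names are purely illustrative.

```python
import math


def smoothing_coeff(time_ms, sample_rate_hz):
    """One-pole coefficient for a given time constant (assumed exponential smoother)."""
    return math.exp(-1.0 / (time_ms * 0.001 * sample_rate_hz))


def track_level(levels_db, attack_ms, release_ms, sample_rate_hz):
    """Follow a sequence of input levels with separate attack and release speeds."""
    a_attack = smoothing_coeff(attack_ms, sample_rate_hz)
    a_release = smoothing_coeff(release_ms, sample_rate_hz)
    estimate = levels_db[0]
    tracked = []
    for level in levels_db:
        # Use the fast coefficient when the input rises, the slow one when it falls.
        a = a_attack if level > estimate else a_release
        estimate = a * estimate + (1.0 - a) * level
        tracked.append(estimate)
    return tracked


if __name__ == "__main__":
    # Levels sampled once per millisecond: quiet, a 50 ms loud burst, then quiet again.
    levels = [50.0] * 10 + [80.0] * 50 + [50.0] * 500
    tracked = track_level(levels, attack_ms=5.0, release_ms=100.0, sample_rate_hz=1000)
    print("5 ms into the burst:", round(tracked[14], 2), "dB")
    print("5 ms after the burst:", round(tracked[64], 2), "dB")
```

With a short attack and a long release, the estimate rises most of the way toward the burst level within a few milliseconds but is still close to the burst level 5 ms after the burst ends. Choosing these two constants is one of the design decisions on which, as Bray notes, products differ.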

In the opinion of the people interviewed for this article, the introduction of more effective amplification algorithms will soon supersede most of the current digital hardware issues. “Hearing instrument hardware will soon be a secondary issue,” says Ruzicka. “The key for hearing aid technology in the future lies in the development of the signal processing algorithms. What we’ll see in the next few years can be described as a software effort. The algorithms developed by the researchers, which can be implemented within the DSP hardware, are what will really bring benefit to patients. For the past five years, it has been a ‘hardware game.’ Just as the hardware in your PC isn’t nearly as important as it was a few years ago, the parallel can be drawn with hearing aids; hardware will become secondary.”

“It is true that the amplification rationales will become a more and more important factor,” says Laurent. “It is our mission to provide the dispenser with rationales that will be efficient at the first fit so that he/she will have only to fine tune the fitting.”

“We are in a stage right now where, because of the nature of digital technology, each company has to outsource its own digital processing chips and make decisions about the architecture they are going to use,” says Schum. “A completely open-platform digital chip that can do anything after it’s manufactured doesn’t exist at present. Because of that, it’s incumbent on manufacturers to decide what the architecture will be and establish, from an audiological standpoint, the purposes of the product. In this way, the audiology and the technology grow up together within that product. That will eventually change when the digital processing chips will be so small, the processing power will be so high, and battery drain will be so low that we will be able to independently develop fitting and processing algorithms separate from the technology and bring the two together after the fact. When that’s the case, we’ll probably see a slightly different shakedown in the market because, at that point, you can have companies and groups of researchers who are just developing processing algorithms and either selling them to the highest bidder or publishing them as a scientific finding.”

With the industry’s continual movement away from the “hardware game,” it may also be incumbent upon dispensing professionals to understand more about how each of the DSP products operates and makes processing decisions. “I think it’s important for professionals to understand the audiological rationale of the company,” says Powers, “because it sets the direction in which that product is trying to take you. But I think it’s possible to distill all the products down to a few key factors. First, we need to determine the frequency gain response based on the audiogram. Second, we need to have a valid fitting method, like DSL, NAL, FIG6 or other established algorithms. Third, we’re now seeing some directional microphones in all or most DSP aids along with some kind of compression strategy which includes gain management and attack/release times. Fourth, all digital aids have incorporated a noise reduction strategy. So within these features—frequency-specific gain, the fitting algorithms, the directional mic system, the noise reduction strategy and the compression system—lie the primary distinctions that define all the diverse products we have in the industry.

“If the dispenser focuses on these broad categories,” continues Powers, “and understands why the instrument is adjusting the frequency-specific gain in a certain way, or why it uses a particular compression or directional microphone strategy, then the rest of the fitting becomes a lot more manageable. The overall picture should be kept in mind when fitting the instruments and adjusting the parameters. As I said, each of the manufacturing companies offers something different in each of the categories. So it becomes a matter of which rationale the dispenser feels makes the most sense and is comfortable with, which software is easiest and most intuitive to use, as well as the other more traditional selling points like product support, price, etc. But understanding the four major categories will help the dispensing professional in assessing the different products on the market and getting a grip on what each is trying to accomplish.”

Part 2 of this article examines possible future developments in DSP instruments.

References

1. Hearing Industries Association. Quarterly statistics report. Alexandria, Va: Hearing Industries Association; 2001.

2. Heidi V. Digitally programmable hearing aids: A historical perspective. Hearing Review. 1996;3(2):20-22.