This article was submitted to HR by Jennifer Groth, MA, director of Clinical Audiology at ReSound Research and Development, Glenview, Ill; John Nelson, PhD, vice president of Audiology at ReSound North America, Bloomington, Minn; Charlotte Thunberg Jespersen, MA, a research audiologist in the Department of Clinical Audiology at GN ReSound A/S in Taastrup, Denmark; and Laurel Christensen, PhD, vice president of Research Audiology, Glenview, Ill. Correspondence can be addressed to John Nelson at .

Wide dynamic range compression (WDRC) was first introduced in commercially available hearing instruments by ReSound in 1989. This technology provides automatic gain adjustments based on the intensity of the input signal. For low-intensity (soft) inputs, gain is automatically increased to improve audibility. For high-intensity (loud) inputs, gain is automatically reduced for comfort.
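The level-dependent gain described above can be sketched as a simple static compression rule. This is only an illustration: the function name `wdrc_gain_db`, the 45 dB SPL knee point, the 2:1 compression ratio, and the 30 dB gain for soft inputs are assumed values chosen for the example, not parameters of any ReSound product.

```python
def wdrc_gain_db(input_db, knee_db=45.0, ratio=2.0, soft_gain_db=30.0):
    """Return the gain (dB) for an input level (dB SPL) under a static WDRC rule.

    All default values are illustrative assumptions.
    """
    if input_db <= knee_db:
        # Below the knee point, soft sounds receive the full (linear) gain.
        return soft_gain_db
    # Above the knee, output grows by only 1/ratio dB per input dB,
    # so the gain shrinks as the input gets louder.
    return soft_gain_db - (input_db - knee_db) * (1.0 - 1.0 / ratio)


for level in (40, 65, 85):
    print(level, "dB SPL in ->", wdrc_gain_db(level), "dB gain")
```

Under these assumed settings, a soft 40 dB SPL input receives the full 30 dB of gain, while a loud 85 dB SPL input receives only 10 dB.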

However, individuals may also prefer different gain values based on variables other than input level. The Environmental Classifier, a feature introduced in the ReSound Metrix hearing instrument and now available in Azure hearing aids, analyzes the input signal and classifies it into one of seven listening situations. The Environmental Fine-Tuner can then be used to adjust the volume (or gain) for each listening situation. In this way, the overall gain for loud speech can differ from that for loud noise.

In addition, ReSound Azure instruments with a volume control have an Environmental Learner option where the automatic volume adjustments are based on the wearer’s volume control use.

This article examines the need for noise management systems and seeks to place the rationale, research, and benefits of ReSound Azure Environmental Optimizer into perspective for hearing care professionals.

The Importance of Noise Management Systems in Hearing Aid Acceptance

It has been established that improved speech audibility and understanding do not guarantee that individuals will accept their hearing instruments. Hearing instrument users must accept that all sounds are amplified, not just those that are pleasurable or of interest. Hearing instruments do not selectively amplify sounds according to individual preferences. Nabelek1 and her colleagues have even proposed that successful hearing instrument usage might be determined more by a wearer’s willingness to listen in background noise than by how well they understand speech.

|

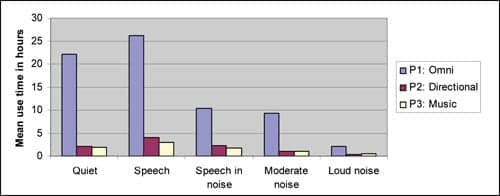

| FIGURE 1. Average logged data for five different listening environments per program during a 1-week period for 19 listeners fit with a multi-memory hearing instrument. From Nelson et al, 2006.8 |

It makes sense that acceptance of background noise varies from one wearer to the next, and that this can play a role in successful hearing instrument usage. All listeners have conditioned responses to certain sounds. Most people would consider the sound of a dentist’s drill to be annoying and a child’s laughter to be pleasurable, even if these two sounds were presented at the same intensity level.

Music, for example, is one type of auditory stimulus that has been demonstrated to evoke powerful emotional responses. Blood and Zatorre2 found that, when listeners heard music they had selected themselves, blood flow increased to parts of the brain that react to euphoria-inducing stimuli such as food, sex, and recreational drugs. Different patterns of cerebral blood flow were observed in response to deliberately dissonant, unpleasant music,3 supporting the idea that sounds may be tolerated differently among individuals depending on their emotional associations.

To explore the idea that differences in tolerance for background noise may be a primary determinant for acceptance and use of amplification, Nabelek et al1 developed the Acceptable Noise Level (ANL) procedure for determining the level of background noise that individuals find acceptable when listening to speech. The ANL expresses (in decibels) the relationship between the level of running speech at the most comfortable listening level and the maximum acceptable level of background noise. A low ANL indicates acceptance of higher background noise levels and appears to be a unique listener characteristic unrelated to age, gender, or severity of hearing loss.
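As a worked example of the relationship just described, the ANL can be computed as the most comfortable listening level (MCL) for running speech minus the maximum acceptable background noise level (BNL). The function name and the example levels below are illustrative assumptions, not data from the cited studies.

```python
def acceptable_noise_level(mcl_db, bnl_db):
    """ANL in dB: most comfortable speech level minus maximum accepted noise level."""
    return mcl_db - bnl_db


# Two hypothetical listeners with the same MCL for running speech.
tolerant = acceptable_noise_level(mcl_db=60, bnl_db=55)    # accepts noise 5 dB below speech
intolerant = acceptable_noise_level(mcl_db=60, bnl_db=45)  # needs noise 15 dB below speech
print(tolerant, intolerant)
```

The first listener, with the lower ANL, accepts a higher level of background noise relative to speech than the second.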

In a more recent study of 191 hearing aid owners, unaided ANLs were predictive of an individual’s success with their devices with 85% accuracy, whereas speech-in-noise scores and other listener characteristics were not found to be related to hearing aid use.4 A less encouraging finding was that, of the 191 listeners evaluated, only 36% were found to be successful (ie, they would use their hearing aids when needed). The investigators pointed to hearing instrument noise management technologies as important to promote success with amplification.

TABLE 1. Environmental Optimizer settings are applied depending on hearing loss severity. The fitter can override these settings for the individual listener.

Solutions for Listening in Noise

VC versus ASP. Hearing instrument wearers 15 to 20 years ago had only one option for reducing annoying background noise: they could turn down the volume control (VC) wheel. They could also increase volume in situations where they found the sound to be too soft.

FIGURE 2. Sliders for seven listening environments can be adjusted for individual volume preferences.

As the popularity of nonlinear amplification grew during the 1990s, the number of patients fit with hearing instruments having volume controls decreased by more than 20%.5 While this figure includes completely-in-the-canal (CIC) devices, the standard configuration for other styles of hearing instruments also has tended to be without volume controls. The rationale for leaving off this feature was that the hearing instrument would use automatic signal processing (ASP) to adjust the gain to the ideal level based on the input sound level, thereby eliminating the need for VC adjustments. An additional benefit was that devices could be made smaller and more cosmetically appealing without the volume control. Despite these advantages, many hearing instrument wearers expressed a desire for a volume control or were dissatisfied because their devices did not have one.5-7

Clearly, the presumption that one set of hearing aid parameters will meet the listening needs of an individual in all conditions does not hold. The goal of all hearing instrument fittings is to provide amplification for optimum speech understanding while ensuring comfort for loud sounds. Even if this prescriptive fitting goal is generally achieved, it does not take into account that individual wearers might want to enhance or diminish different aspects of the amplified sound in different situations. For example, a hearing instrument wearer might desire more volume than prescribed in an important meeting at work, but wish for less volume when relaxing with the newspaper on the train ride home. The wearer with low acceptance for background noise levels (ie, a high ANL score) has no recourse but to turn off the instrument when the sound becomes overwhelming, and so risks missing important sounds.

Multimemories. Using multi-memory hearing instruments with settings for specific listening situations has been another option to address the issue. However, the sheer variety of listening situations encountered in daily life would require an unmanageable number of programs.

FIGURE 3. High accuracy of the Environmental Classifier. Adapted from Tchorz, 2006.9

Additionally, many users appear not to regularly and consistently use the programs made available to them. Nelson et al8 reported on a group of successful hearing aid users whose average use-time was 10 hours per day for Program 1 versus less than 2 hours for Programs 2 and 3 in a multi-memory hearing instrument with datalogging. Figure 1 shows the average number of hours these 19 participants spent in each of the three programs over a 1-week period as a function of listening environment.

Not only did they spend most of their time in the default omnidirectional program, but the breakdown of listening environments also does not indicate that the alternate programs were consistently used for specific types of listening environments. The hearing instruments in this study did have volume controls, and while usage of this control was modest, situational volume preferences that differed from the prescribed setting were observed for some of the participants. For example, 5 subjects tended to increase volume in speech environments, and 6 listeners tended to turn the volume down in noisy environments. Other participants may actually have preferred different volume settings in different situations, but may not have wanted to make the adjustment manually.

Natural Listening Balance

One way to account for situational preferences in volume without requiring action on the part of the wearer is for the hearing instrument to do so automatically. The Environmental Optimizer in the ReSound Azure hearing instruments can be set by the fitter to adjust the overall volume of the hearing instruments in use depending on the type of listening environment. Thus, the wishes of wearers who would like the volume for listening to soft speech a little higher can be accommodated without needing to manipulate the VC. Likewise, if they would like the volume to be lower in noisy situations, this can also be configured to be carried out automatically.

There is no need to make compromises on overall gain. In fact, the Environmental Optimizer does not even require that the device have a VC, so this feature works for all hearing instruments in the product line. The ReSound Aventa fitting software automatically applies volume adjustments in seven listening environments based on degree of hearing loss. These suggested values are derived from in-house clinical trials, and are presented in Table 1. The settings reflect group tendencies, and are therefore conservative.

The hearing care professional can utilize sliding controls in the software to take personal volume preferences into account, as illustrated in Figure 2. When the hearing aid is being worn, the volume change is effected within several seconds when a new listening environment is identified.

Classifying Environments

TABLE 2. The Environmental Classifier uses sound input type and loudness to classify into seven different categories.

The success of the Environmental Optimizer is dependent on the ability of the hearing instrument to accurately and consistently identify acoustic environments, and to characterize these environments in terms that will be meaningful for the wearer. The Environmental Classification scheme developed for the ReSound Azure hearing instruments considers acoustic factors that are of importance to all hearing instrument wearers:

- Is there sound above an ambient level?

- Is there speech present?

- Are there other background noises present?

- What are the levels of the sounds present?

Table 2 shows the information used to categorize sound inputs into the seven listening environments.

The classification scheme illustrated in Table 2 is deceptively simple. In fact, the Environmental Classifier employs sophisticated speech and noise detection algorithms based on frequency content and spectral balance, as well as the temporal properties of the incoming sound, to determine the nature of the acoustic surroundings. Furthermore, the classification does not occur on the basis of stringent predetermined criteria, but rather on the basis of probabilistic models, resulting in classification of listening environments that has shown greater consistency with listener perception.
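The idea of choosing an environment from detector probabilities rather than fixed criteria can be sketched as follows. This is a minimal illustration, not the algorithm used in the Environmental Classifier: the function name, the seven labels other than "Quiet" and "Soft Speech", the level thresholds, and the assumption that the speech and noise detectors are independent are all hypothetical.

```python
def classify_environment(p_speech, p_noise, level_db,
                         quiet_below_db=45.0, loud_above_db=70.0):
    """Pick the most probable of seven hypothetical environment labels.

    p_speech and p_noise are detector probabilities in [0, 1]; the labels and
    level thresholds are assumptions for illustration only.
    """
    if level_db < quiet_below_db:
        # Nothing above the (assumed) ambient level.
        return "Quiet"
    # Joint probabilities of the three non-quiet sound types, assuming the
    # speech and noise detectors are independent.
    scores = {
        "Speech": p_speech * (1.0 - p_noise),
        "Speech in Noise": p_speech * p_noise,
        "Noise": (1.0 - p_speech) * p_noise,
    }
    sound_type = max(scores, key=scores.get)
    loudness = "Loud" if level_db >= loud_above_db else "Soft"
    return f"{loudness} {sound_type}"


print(classify_environment(0.9, 0.1, 55))
print(classify_environment(0.8, 0.9, 75))
```

In this sketch the label follows whichever sound type is most probable, so a small change in detector output near a boundary shifts the probabilities gradually instead of tripping a hard rule.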

The quality of this classification method was investigated by Tchorz,9 who compared the environmental classification systems of two state-of-the-art hearing instruments. In this study, sound samples that had been subjectively judged as “quiet,” “speech-in-noise,” or “noise” were presented in an anechoic chamber with the hearing instrument mounted on a KEMAR-like manikin, and the output of the environmental classification was monitored. Both classification systems identified quiet acoustic environments correctly 100% of the time. However, in more acoustically varying environments, the Azure system showed a much higher degree of accuracy (Figure 3). Regardless of the type of environment, this classification method was highly consistent with the subjective classification of environments.

Learning Environmental Preferences

FIGURE 4. The Environmental Optimizer is designed to offer extended levels of personalization to the individual wearer.

As discussed previously, the Environmental Optimizer enables the fitter to personalize volume settings according to the acoustic environment for all Azure hearing instruments. As an additional feature, wearers of devices with volume controls can actually train their instruments to their preferred volume settings in their real-world surroundings. This has an obvious advantage for both the hearing instrument user and the hearing care professional, because it reduces the need for fine-tuning visits to reach the best settings for the individual.

Through the volume control, the hearing instrument gradually learns and applies the wearer’s preferred volume settings in their actual listening environments. As this process occurs, the wearer needs to manipulate the volume control less often. Because the learning is ongoing, it continually takes into account changes in volume preferences or hearing status as reflected in the way the volume control is used. As schematized in Figure 4, the Environmental Optimizer carries personalization of the fitting from group-based trends to the most individualized level.

Figure 5 illustrates how the volume setting can be trained for a particular listening situation. In this example, Mrs K puts on her Azure hearing instrument in the morning in the quiet of her bedroom and then enters her living room, where her husband looks up from his paper and starts a conversation. Mrs K increases her VC by 4 dB. At the same time, the Environmental Classifier identifies that the environment has changed from “Quiet” to “Soft Speech.” If we assume that this scenario occurs 4 days in a row, Figure 5 shows that Mrs K adjusts the VC wheel only on the first 3 days and that she turns the wheel less each day. Mrs K needs to make smaller adjustments because the hearing instrument is learning her preference for this situation.

FIGURE 5. For hearing instruments with volume controls, gradual and continuous learning is based on the environment and the wearer’s volume preferences. This example is of 4 dB learning for one environment.

The volume change learned by the instrument is gradual, as can be seen by the smooth nature of the “Learned gain” curve in Figure 5. This smoothing is determined by a confidence parameter that is updated each time the volume control is adjusted by the user. If the user consistently alters the volume in the same way and in a particular acoustic environment, the confidence that this action truly reflects her intent and preference increases. This reflects a Bayesian approach to learning, as the confidence in the validity of the hypothesis—namely, that the volume change is truly preferred by the wearer—is changed as new evidence emerges.10-12

Inspired by this system of inductive logic, the action of the Environmental Optimizer is actually configured and controlled by the wearer. As a result, the hearing instrument defers to and supports the superior ability of the individual’s central auditory system to manage sound rather than making decisions for the individual based on theoretical considerations.
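The gradual, confidence-based learning described above can be illustrated with a deliberately simplified model. This sketch is not the Bayesian method of the cited work; it swaps in a plain confidence-weighted update, and the class name `VolumeLearner`, the confidence step of 0.25, and the assumption that the wearer ignores differences smaller than 0.5 dB are all hypothetical.

```python
class VolumeLearner:
    """Confidence-weighted learning of a volume offset for one environment."""

    def __init__(self, confidence_step=0.25):
        self.learned_db = 0.0   # offset the instrument applies automatically
        self.confidence = 0.0   # 0 = no trust yet, 1 = full trust
        self.confidence_step = confidence_step
        self._last_sign = 0

    def observe(self, adjustment_db):
        """Update the learned offset from one manual volume-control change."""
        sign = (adjustment_db > 0) - (adjustment_db < 0)
        if self._last_sign in (0, sign):
            # Same direction as before: trust the wearer's adjustments more.
            self.confidence = min(1.0, self.confidence + self.confidence_step)
        else:
            # Contradictory direction: trust them less.
            self.confidence = max(0.0, self.confidence - self.confidence_step)
        self._last_sign = sign
        self.learned_db += self.confidence * adjustment_db


def simulate(preferred_db=4.0, days=4, min_adjust_db=0.5):
    """Return each day's manual adjustment (0.0 = wheel untouched) and the learned offset."""
    learner = VolumeLearner()
    adjustments = []
    for _ in range(days):
        residual = preferred_db - learner.learned_db
        # Assume the wearer leaves the wheel alone for very small differences.
        manual = residual if abs(residual) >= min_adjust_db else 0.0
        if manual:
            learner.observe(manual)
        adjustments.append(manual)
    return adjustments, learner.learned_db


print(simulate())
```

With these assumed values, the simulated wearer adjusts the volume by 4, 3, and 1.5 dB on the first three days and leaves the wheel untouched on the fourth, by which point the learned offset has reached 3.625 dB.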

When the ReSound Azure hearing instrument is read with the ReSound Aventa fitting software, the net effect of Environmental Optimizer settings made by the fitter and the learned volume preferences configured by the wearer is displayed in the Environmental Optimizer screen (Figure 2). The learned values for each environment resulting from the wearer’s actions alone are displayed in the Onboard Analyzer screen.

Summary

This article examines one of ReSound Azure’s advanced hearing instrument features, the Environmental Optimizer, which is designed to adjust gain automatically according to the listening environment. The Environmental Optimizer uses the Environmental Classifier to analyze the acoustic environment and categorize it into one of seven unique types. The Environmental Fine-Tuner is designed to allow the gain (ie, volume) for each environment to be adjusted automatically based on the individual requirements of the wearer.

The Environmental Learner, in turn, uses a Bayesian approach to probability theory to empower wearers to train their hearing instruments to the desired Environmental Fine-Tuner settings. These adjustments are intended to work together with the WDRC system, which selects gain for audibility and comfort based on the signal input level. The Environmental Optimizer then adjusts the gain based on the wearer’s preferences in different listening environments.

References

1. Nabelek AK, Tucker FM, Letowski TR. Toleration of background noises: relationship with patterns of hearing aid use by elderly persons. J Sp Hear Res. 1991;34:679-685.

2. Blood AJ, Zatorre RJ. Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proc Nat Acad Sci USA. 2001;98(20):11818-11823.

3. Blood AJ, Zatorre RJ, Bermudez P, Evans AC. Emotional responses to pleasant and unpleasant music correlate with activity in paralimbic brain regions. Nature Neurosci. 1999;2(4):382-387.

4. Nabelek AK, Freyaldenhoven MC, Tampas JW, Burchfield SB, Muenchen RA. Acceptable noise level as a predictor of hearing aid use. J Am Acad Audiol. 2006;17:626-639.

5. Kochkin S. Isolating the impact of the volume control on customer satisfaction. Hearing Review. 2003;10(1):26-35.

6. Surr RK, Cord MT, Walden BE. Response of hearing aid wearers to the absence of a user-operated volume control. Hear Jour. 2001;54(4):32-36.

7. Arlinger S, Billermark E. One year follow-up of users of a digital hearing aid. Brit J Audiol. 1999;33(4):223-232.

8. Nelson J, Kiessling J, Dyrlund O, Groth J. Integrating hearing instrument datalogging into the clinic. Paper presented at: American Academy of Audiology convention; 2006; Minneapolis.

9. Tchorz J. Datalogging functionality: benefit and accuracy of information for the hearing care professional. Paper presented at: 51st International Congress of Hearing Aid Acousticians; 2006; Frankfurt, Germany.

10. Dijkstra TM, Ypma A, de Vries B, Leenen JRG. The learning hearing aid: common-sense reasoning in hearing aid circuits. Hearing Review. 2007;14(11):34-51.

11. de Vries B, Ypma A, Dijkstra TM, Heskes TM. Paper presented at: Intl Hearing Aid Research Conference; 2006; Lake Tahoe, Calif.

12. Ypma A, de Vries B, Geurts J. Robust volume control personalization from online preference feedback. In: Proceedings of IEEE Intl Workshop on Machine Learning for Signal Processing; 2006; Maynooth, Ireland:441-446.