Frequency transposition is currently receiving a fresh look from the hearing aid industry. A number of interesting transpositional approaches have been tried over the years.

One method uses amplitude modulation (AM) and relates to both enhanced intelligibility and the neurobiology of hearing. Current speech processing approaches capture high frequencies and downshift them to lower regions, on the assumption that a more substantial sensory neural base exists for coding in that region. Alternatively, speech has been upshifted into the high frequencies using AM, albeit more rarely, in hearing remediation. Taken together, there are a number of frequency transposition options.

Options in Transposition

In the case of severe high-frequency hearing loss, the conventional wisdom is to avoid amplifying in “dead regions” because no hair cell transduction is possible there, and thus there is no benefit.1 In fact, amplification in a dead region, where both the outer and inner hair cells are destroyed, can result in poorer overall discrimination and should be avoided. The transpositional options are limited to:

- Stimulating regions adjacent to dead regions (“islands of hearing”), or near the edges of the dead regions in the so-called “off-frequency area”;

- Redistributing the high frequencies over a broad area of the functional (nondead) area where the hair cell population is relatively intact, or

- Eliminating transposition by simply restricting amplification to the area of the basilar membrane where there are no dead regions.

Innovations in the 1970s-1980s

Theoretically, there is a fourth option: stimulating the base of the cochlea, but excluding all dead regions. This was pursued vigorously more than 25 years ago in select cases of deaf individuals with good hearing thresholds in the range of 8 kHz to 14 kHz. These individuals were remarkable in that they were profoundly deaf in the audiometric range, but exhibited good speech comprehension and expression.2

Custom hearing aids for each individual were fashioned using AM.3 In AM there are two components, a carrier and a modulator, that are multiplied. The carrier sets the center frequency, and the modulator sets the bandwidth. Thus, in this application, the carrier would be set within the range of good high-frequency hearing and modulated by full-spectrum speech. Speech with a bandwidth of 300 Hz to 3,000 Hz, multiplied by a carrier of, say, 10,000 Hz, would result in the carrier plus and minus the modulator: 7,000-9,700 Hz (lower sideband) and 10,300-13,000 Hz (upper sideband). The upper sideband modulated speech would be audible to this unique group of patients (typically, the carrier and lower sideband are suppressed, leaving only the upper sideband).
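The sideband arithmetic in this example can be checked with a few lines of code. This is only a sketch of ideal double-sideband AM; the function name `am_sidebands` is illustrative and does not come from any hearing aid software.

```python
def am_sidebands(carrier_hz, mod_low_hz, mod_high_hz):
    """Return the (lower, upper) sideband ranges in Hz for ideal AM.

    Each modulator frequency f appears at carrier - f and carrier + f.
    """
    lower = (carrier_hz - mod_high_hz, carrier_hz - mod_low_hz)
    upper = (carrier_hz + mod_low_hz, carrier_hz + mod_high_hz)
    return lower, upper


# Speech band of 300-3,000 Hz on a 10,000 Hz carrier, as in the text.
lower, upper = am_sidebands(10_000, 300, 3_000)
print(lower)  # (7000, 9700)
print(upper)  # (10300, 13000)
```

Moving the carrier slides both sidebands by the same amount, while narrowing the speech band narrows each sideband.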

The concept was to use AM to frequency-transpose speech above the dead regions onto the cochlea base.4 With the advent of more powerful hearing instruments with higher-frequency responses as alternatives in the late 1980s, these AM custom aids were no longer deemed necessary, principally due to the low demand and custom cost.

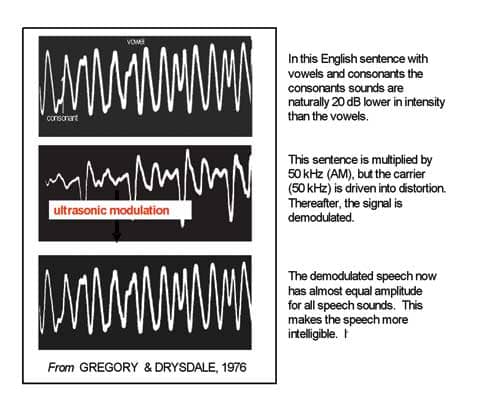

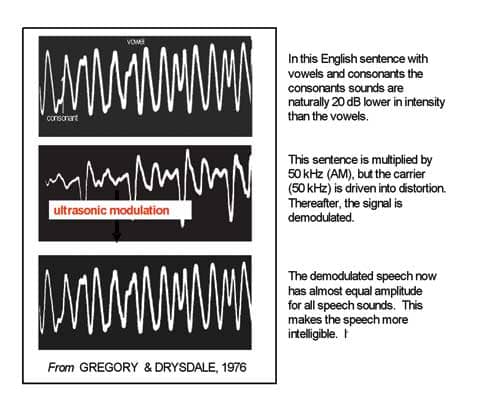

About the same time, another modulation approach was suggested for application with the vast majority of profoundly deaf who had no high-frequency residual hearing. Gregory and Drysdale5 proposed modulating speech by ultrasound, then intentionally driving the carrier into distortion, which would increase the energy in relatively weak speech sidebands (the energy is equally divided between the carrier and the two sidebands combined). The modulator (speech) was then demodulated, much like an AM radio station signal, such that the original speech frequencies would reappear with an equal amplitude profile.

Peak-clipping the carrier before demodulation resulted in an improved speech signal with amplitude compression for the vowels. That is, the carrier overdrive reduced the amplitude disparity between consonants and vowels to just a few dB, making sibilants and fricatives more salient (Figure 1). The sibilant and fricative sounds are very difficult to detect with limited hearing.
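The compressive effect of clipping can be illustrated with a toy simulation. The sketch below is not the Gregory and Drysdale circuit: it assumes that each speech segment can be represented only by its level scaling the carrier, that the detector is an idealized rectify-and-average diode stage, and that the sample rate, carrier frequency, and clip level are arbitrary illustrative values.

```python
import math

FS = 1_000_000       # simulation sample rate in Hz (assumed)
CARRIER_HZ = 30_000  # ultrasonic carrier in Hz (assumed)
CLIP_LEVEL = 0.1     # peak-clipping threshold (assumed)


def modulated_segment(level, duration_s=0.01):
    """Carrier scaled by a constant level: a toy stand-in for a speech segment."""
    n = round(duration_s * FS)
    return [level * math.cos(2 * math.pi * CARRIER_HZ * i / FS) for i in range(n)]


def peak_clip(signal, limit):
    """Hard-limit every sample to the range [-limit, +limit]."""
    return [max(-limit, min(limit, v)) for v in signal]


def diode_demodulate(signal):
    """Rectify and average: mean output of an idealized diode detector."""
    return sum(abs(v) for v in signal) / len(signal)


def disparity_db(vowel_level, consonant_level, clip=None):
    """Vowel-to-consonant level difference in dB after demodulation."""
    detected = []
    for level in (vowel_level, consonant_level):
        segment = modulated_segment(level)
        if clip is not None:
            segment = peak_clip(segment, clip)
        detected.append(diode_demodulate(segment))
    return 20 * math.log10(detected[0] / detected[1])


before = disparity_db(1.0, 0.1)             # vowel starts 20 dB above consonant
after = disparity_db(1.0, 0.1, CLIP_LEVEL)  # same segments, carrier clipped
print(f"disparity without clipping: {before:.1f} dB")
print(f"disparity with clipping:    {after:.1f} dB")
```

In this toy model the clip level sits at the consonant peak, so the consonant passes untouched while the vowel is held close to the same ceiling, and the difference shrinks from 20 dB to a few dB.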

Figure 1. After the speech is modulated by an ultrasonic carrier, the carrier is peak-clipped, then demodulated. The carrier clipping results in almost equal amplitude among all the vowels and consonants, making the latter more perceptually salient than if the vowels had the natural 20 dB higher amplitude.

This speech enhancement effect can be readily demonstrated using diode demodulation, and AM is currently a useful lab procedure. I routinely use an analog device (Figure 2) in which I can control the number of sidebands, the depth of modulation, and the spectrum of the modulator. Once I am satisfied with the AM parameters, all are generated by software calculations on chips for wearable technology. This idea of “squeezing speech in the deaf ear” never went beyond the laboratory for hearing aid use, and it too was ultimately discarded with the advances of conventional hearing aids. Nonetheless, if the speech is filtered before modulating, high-gain, narrow-bandwidth, high-frequency stimulation can be provided for individuals with less hearing impairment using this technique.

Figure 2. The laboratory apparatus for controlling various parameters of amplitude modulation is shown.

High-Frequency Speech Cues

Modulation-based hearing aids rapidly lost ground to conventional hearing aids. That is not to say that hearing aids of that period had all the requisite high-frequency power by today’s standards, but they delivered enough speech cues to provide utility in an aural world.

Just how much high-frequency amplification would be sufficient to advance hearing aid performance, and in what range, was a problem seeking a solution. Boothroyd and Medwetsky,6 using the sibilant /s/, found that its high-frequency content varied with the vowel context between 5 kHz and 8 kHz: “These data suggest that the upper frequency limit of a high-fidelity hearing aid should be in the region of 10 kHz. If this cannot be accomplished with direct amplification, an alternative might be the selective use of frequency transposition.”6

There are instruments that have extended high-frequency responses exceeding the Boothroyd and Medwetsky6 proposed criterion of 10 kHz, but that does not necessarily negate the use of upshifted frequency transposition if the patient has intact high-frequency hearing to some degree and speech perceptual difficulties.

The general concept of transposition is shown in Figure 3a-b. The general fitting rule is that the frequency region of AM should fall within the functional sensory area of the cochlea, avoiding dead regions. The identification of dead regions is somewhat complex, typically involving psychoacoustic tuning curves. For patients with hearing thresholds over 90 dB HL, such nonsensory areas are assumed to exist. The “quick fix” solution is to slide the carrier in frequency, then rely on the patient’s response as a measure of fitting benefit.

Figure 3a-b. The concept of stimulating in an “island of hearing” and avoiding dead regions is depicted in Figure 3a (left panel). The place on the basilar membrane sets the carrier frequency, and the size of the island of hearing sets the bandwidth limitation. The two characteristics of AM are depicted in Figure 3b (right panel). The upper tracing is the time waveform of the speech sample, whereas the lower signal is the frequency spectrum, which in this case is designed to be very narrow. The ordinate is relative energy and the abscissa is frequency (in Hz).

Selecting islands of hearing while avoiding dead areas is time-consuming, although simpler procedures like those detailed by Moore et al7 offer promise. Shifting the carrier is simply a matter of changing the frequency, and varying the bandwidth is a matter of selecting the settings on the speech filter. Trial and error using the patient’s subjective reports as guidance may be sufficient. This AM technique is not available in conventional aids; however, a hearing aid based on this type of modulation is possible.8
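As a rough illustration of sliding the carrier and adjusting the speech filter, the sketch below lists which candidate carriers keep the upper sideband entirely inside an island of hearing. The island limits, candidate carriers, and function names are hypothetical; a real fitting would still rely on dead-region testing and the patient's reports.

```python
def upper_sideband(carrier_hz, speech_band):
    """Frequency range in Hz occupied by the upper sideband."""
    low, high = speech_band
    return carrier_hz + low, carrier_hz + high


def usable_carriers(candidates_hz, speech_band, island):
    """Carriers whose upper sideband lies entirely inside the island of hearing."""
    island_low, island_high = island
    fits = []
    for carrier in candidates_hz:
        sb_low, sb_high = upper_sideband(carrier, speech_band)
        if island_low <= sb_low and sb_high <= island_high:
            fits.append(carrier)
    return fits


island = (8_000, 14_000)                   # hypothetical island of hearing, Hz
candidates = range(6_000, 14_000, 1_000)   # carriers tried while "sliding"

# Full speech band (300-3,000 Hz) leaves only a few usable carriers.
print(usable_carriers(candidates, (300, 3_000), island))
# [8000, 9000, 10000, 11000]

# A narrower speech filter (300-1,000 Hz) leaves more room to slide.
print(usable_carriers(candidates, (300, 1_000), island))
# [8000, 9000, 10000, 11000, 12000, 13000]
```

The comparison shows the trade-off described above: the size of the island limits the bandwidth, and a narrower speech filter widens the range of acceptable carrier settings.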

Since it may not be desirable to deliver all the speech frequencies to a basilar membrane with diffuse functional hair cell populations, it is possible to extract the “essence” of a sibilant or fricative sound by filtering, then modulate it on a carrier to deliver a fixed-bandwidth signal onto the precise functional areas of the cochlear base. The narrower the speech spectrum to be modulated, the narrower the stimulation in the cochlea (with the understanding that intensity can shift the place of stimulation in the cochlea). The bandwidth of the modulation can be reduced until it is very narrow (Figure 3b). Sibilant perception is very robust; only some spectral content is needed in the context of running speech for cuing /s/, /sh/, or /f/.
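A filter-then-modulate chain of this kind can be sketched as follows. Everything here is an assumption made for illustration: the "speech" is just two tones (a strong 500 Hz vowel-like component and a weaker 5 kHz sibilant-like component), the filter is a single standard biquad band-pass, and the modulation is a plain multiplication by the carrier, which produces both sidebands rather than the upper sideband alone.

```python
import cmath
import math

FS = 48_000  # sample rate in Hz (assumed)


def bandpass(x, f0, q):
    """Biquad band-pass (audio EQ cookbook form, unity gain at f0)."""
    w0 = 2 * math.pi * f0 / FS
    alpha = math.sin(w0) / (2 * q)
    b0, b2 = alpha, -alpha  # b1 is zero for this filter type
    a0, a1, a2 = 1 + alpha, -2 * math.cos(w0), 1 - alpha
    y = []
    x1 = x2 = y1 = y2 = 0.0
    for xn in x:
        yn = (b0 * xn + b2 * x2 - a1 * y1 - a2 * y2) / a0
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y


def tone_amplitude(x, freq):
    """Amplitude of the component at freq (single-frequency DFT)."""
    acc = sum(v * cmath.exp(-2j * math.pi * freq * i / FS) for i, v in enumerate(x))
    return 2 * abs(acc) / len(x)


n = FS // 10  # 100 ms of signal
t = [i / FS for i in range(n)]
speech = [math.sin(2 * math.pi * 500 * ti) + 0.1 * math.sin(2 * math.pi * 5_000 * ti)
          for ti in t]

cue = bandpass(speech, f0=5_000, q=5.0)  # keep mainly the sibilant-like band
carrier_hz = 10_000
transposed = [c * math.cos(2 * math.pi * carrier_hz * ti) for c, ti in zip(cue, t)]

# Sibilant-to-vowel amplitude ratio before and after the chain.
ratio_before = tone_amplitude(speech, 5_000) / tone_amplitude(speech, 500)
ratio_after = (tone_amplitude(transposed, carrier_hz + 5_000)
               / tone_amplitude(transposed, carrier_hz + 500))
print(f"sibilant/vowel ratio in the input:      {ratio_before:.2f}")
print(f"sibilant/vowel ratio after transposing: {ratio_after:.2f}")
```

In the input the sibilant-like tone is the weaker component; after filtering and modulation it dominates the transposed band around the carrier. A sharper or narrower filter would confine the stimulation further.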

So what exactly is being transposed? Yes, it is the frequency (ie, frequency spectrum in Figure 3b), but it is also the time envelope of the speech sample. Time cues are very powerful in determining intelligibility, especially within a restricted hearing range. The envelope is the time domain manifestation of the spectrum and is the complement of frequency (ie, time- and frequency-related). In fact, time cues are very effective in parsing spectrally similar sounds such as /sa/ and /sta/, even in the normal-hearing ear. The stop consonant /t/ creates a very brief silent period in what would otherwise sound like /sa/; the silent cue is robustly carried by the envelope.
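The survival of the silent cue can be shown with a toy envelope. In this sketch, /sa/ and /sta/ are reduced to level-only envelopes (a weak fricative, an optional 20 ms silence, then a strong vowel), the envelope is multiplied onto a 10 kHz carrier, and it is recovered by an idealized diode detector with a 1 ms smoothing window. All of these values and function names are assumptions chosen for illustration.

```python
import math

FS = 100_000         # sample rate in Hz (assumed)
CARRIER_HZ = 10_000  # carrier in Hz (assumed)


def syllable_envelope(with_stop):
    """Level-only envelope: fricative, optional silent gap, then vowel."""
    segments = [(0.3, 0.030)]            # /s/: (level, duration in s)
    if with_stop:
        segments.append((0.0, 0.020))    # silent period of the stop /t/
    segments.append((1.0, 0.050))        # /a/
    envelope = []
    for level, duration in segments:
        envelope.extend([level] * round(duration * FS))
    return envelope


def transpose(envelope):
    """Multiply the envelope onto the carrier."""
    return [e * math.cos(2 * math.pi * CARRIER_HZ * i / FS)
            for i, e in enumerate(envelope)]


def diode_demodulate(signal, window=100):
    """Rectify, then smooth with a moving average of `window` samples (1 ms)."""
    rectified = [abs(v) for v in signal]
    smoothed, running = [], 0.0
    for i, v in enumerate(rectified):
        running += v
        if i >= window:
            running -= rectified[i - window]
        smoothed.append(running / min(i + 1, window))
    return smoothed


def longest_gap_ms(envelope, threshold=0.05):
    """Duration in ms of the longest run below the threshold."""
    longest = run = 0
    for v in envelope:
        run = run + 1 if v < threshold else 0
        longest = max(longest, run)
    return 1000 * longest / FS


gap_sta = longest_gap_ms(diode_demodulate(transpose(syllable_envelope(True))))
gap_sa = longest_gap_ms(diode_demodulate(transpose(syllable_envelope(False))))
print(f"/sta/ gap after transposition: {gap_sta:.1f} ms")
print(f"/sa/  gap after transposition: {gap_sa:.1f} ms")
```

The recovered envelope for /sta/ still contains a silent interval of roughly the original 20 ms, while /sa/ shows none, which is the sense in which the time cue rides along with the transposed spectrum.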

By identifying islands of high-frequency hearing, AM techniques can be used to deliver high-frequency speech cues to aid comprehension. As if that were not sufficient in its own right, there is a second advantage in stimulating the remaining functioning sensory cells in the cochlea.

High-Frequency Stimulation as Therapy for Hearing Loss and Tinnitus

Two recent studies by Noreña and colleagues9,10 are important in this regard. It appears likely that the central auditory characteristics of noise-induced hearing loss are 1) reorganization of the tonotopic map in the auditory cortex, and 2) increased brainstem neuron spontaneous firing rate resulting in neural synchrony. With hearing loss, the neurons in the cortex reorganize, shifting their “best response” lower in frequency, approximating the new sensitivity of the ear. Keeping cats, after noise trauma, in a high-frequency enriched environment prevented tonotopic map reorganization and reduced the expected hearing loss due to the noise relative to cats kept in quiet.

That is to say, high-frequency stimulation maintained normal cortical organization and essentially improved hearing (by reducing expected hearing loss). Noise-exposed cats kept in quiet or in a low-frequency environment showed increased spontaneous firing rate and synchrony of firing. However, exposed cats kept in the high-frequency environment displayed normal spontaneous firing rates. The authors interpreted this as an indication of the absence of tinnitus. Goldstein et al11 verified the same hearing and tinnitus improvement in hearing-impaired patients by applying selected high-frequency stimulation using AM. Low-level (10 dB SL) stimulation for 8 weeks reduced tinnitus and improved high-frequency hearing.

The hearing improvement is probably not due to a structural change in the periphery, but rather may be due to increased neural gain secondary to either maintaining the neural map or a reversal of hearing loss-induced reprogramming of the cortical map. Neurons that revert to the original best frequency would, theoretically, exhibit modest threshold improvements (10 dB to 15 dB) that could be reflected in the audiogram.

Obviously, this mechanism simply requires more study. However, it is possible to design a hearing aid that helps compensate for impaired speech perception, aids in tinnitus adjustment, and possibly improves high-frequency hearing.

What Else Is Possible?

Individuals with severe deafness have been shown to benefit from an AM hearing aid,12 but that application requires special instrumentation and stimulation by bone conduction.

Individuals with steeply sloping losses with poor hearing over 2 kHz can also benefit by stimulation in the region of the slope. This is the type of case Kuk et al13 describe, in which an octave band of high-frequency speech is linearly transposed into the range of existing high frequencies. Since filtered speech components are all coherent signals derived from the same sample, AM upshifting can be added to provide complementary time cues to commercially available frequency transposition hearing aids, as well as possibly provide high-frequency therapy. The utility of this hybrid approach has not been examined, but is certainly intriguing.

Just as multiple channels provide custom frequency options in standard aids, multiple channels can also be applied to an AM or a hybrid AM aid. There is no reason to limit the carrier to one frequency. Two or more carriers can be used with bandwidths set to encompass /s/ and /f/, for example.

Multiple carriers, providing various bandwidths of speech cues, could provide more individual fitting options. These speech modulation signals would all be additive in the brain, thereby potentially enhancing speech perception. If a carrier modulated by speech is slowly swept from 3 kHz to 15 kHz, the speech “sounds the same” with the exception of variations in loudness due to changes in resonances in the transducer and ear sensitivity. Thus, only amplitude balancing is required to use multiple speech cues with multiple channels.
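Amplitude balancing across several carriers might look like the sketch below. The measured levels are hypothetical numbers standing in for the combined effect of transducer resonances and ear sensitivity at each carrier, and the modulators are constant placeholders rather than real filtered speech.

```python
import math


def balancing_gains_db(measured_levels_db):
    """Gain in dB per carrier that brings every channel up to the loudest one."""
    target = max(measured_levels_db.values())
    return {carrier: target - level for carrier, level in measured_levels_db.items()}


def db_to_linear(gain_db):
    """Convert an amplitude gain in dB to a linear factor."""
    return 10 ** (gain_db / 20)


def mix_channels(channels, gains_db, t):
    """Sum of gain * modulator(t) * cos(2*pi*carrier*t) over all channels."""
    return sum(
        db_to_linear(gains_db[carrier]) * modulator(t)
        * math.cos(2 * math.pi * carrier * t)
        for carrier, modulator in channels.items()
    )


# Hypothetical level of each channel relative to the loudest, in dB.
measured = {6_000: -3.0, 9_000: 0.0, 12_000: -9.0}
gains = balancing_gains_db(measured)
print(gains)  # {6000: 3.0, 9000: 0.0, 12000: 9.0}

# Placeholder modulators; in practice each would be a filtered speech band.
channels = {carrier: (lambda t: 1.0) for carrier in measured}
sample_at_zero = mix_channels(channels, gains, 0.0)
```

Because each channel only needs a gain correction, adding another carrier amounts to adding one more entry to the table of measured levels.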

Audible Ultrasound… Revisited. By Martin L. Lenhardt, PhD. Hearing Review. 1998;5(3):50-52.

Central Benefits of Transposition

The central auditory system with a normal frequency map might be assumed to favor good speech perception. Clearly, the prevention of high spontaneous firing rates in the auditory brainstem would also favor speech perception. Thus, preserving the cortical map and reducing spontaneous firing rates may be achieved with just a small part of the speech spectrum modulated onto the audible high frequencies. Multiple channels hold even more promise, either as stand-alone devices or as hybrids. The only caution is not to risk loss of intelligibility in the process, which should be preventable with careful selection of AM parameters.

Hearing aids may, in fact, really become brain aids. As William G. Hardy told us almost a half century ago: “Hearing is not in the ear but in the brain.”

Martin L. Lenhardt, PhD, AuD, is professor of Biomedical Engineering, Otolaryngology and Emergency Medicine at Virginia Commonwealth University and president of Ceres Biotechnology, LLC, Richmond, Va.

References

1. Moore B. Dead regions in the cochlea: conceptual foundations, diagnosis, and clinical applications. Ear Hear. 2004;25(2):98-116.

2. Berlin C, Wexler K, Jerger J, Halperin H, Smith S. Superior ultra-audiometric hearing: a new type of hearing loss which correlates highly with unusually good speech in the “profoundly deaf.” Otolaryngology. 1978;86(1):111-116.

3. Collins M, Cullen J, Berlin C. Auditory signal processing in a hearing-impaired subject with residual ultra-audiometric hearing. Audiology. 1981;20:347-361.

4. Berlin C. Unusual residual high-frequency hearing. Seminars in Hearing. 1985;6:389-395.

5. Gregory R, Drysdale A. Squeezing speech in the deaf ear. Nature. 1976;264:748-751.

6. Boothroyd A, Medwetsky L. Spectral distribution of /s/ and the frequency response of hearing aids. Ear Hear. 1992;13(3):150-157.

7. Moore B, Glasberg B, Stone M. New version of the TEN test with calibration in dB HL. Ear Hear. 2004;25(5):478-487.

8. Lenhardt M. Upper audio range hearing apparatus and method. US Patent #6,731,769.

9. Noreña A, Arnaud J, Eggermont J. Enriched acoustic environment after noise trauma abolishes neural signs of tinnitus. NeuroReport. 2006;17:559-563.

10. Noreña A, Eggermont J. Changes in spontaneous neural activity immediately after an acoustic trauma: implications for neural correlates of tinnitus. Hear Res. 2003;183:137-153.

11. Goldstein B, Lenhardt M, Shulman A. Tinnitus improvement with ultra high frequency vibration therapy. Int Tinnitus J. 2005;11(1):14-22.

12. Lenhardt ML, Skellett R, Wang P, Clarke AM. Human ultrasonic speech perception. Science. 1991;253:82-85.

13. Kuk F, Keenen D, Peeters H, Korhonen P, Hau O, Anderson H. Critical factors in ensuring efficacy of frequency transposition. Hearing Review. 2007;14(3):60-66.

Correspondence can be addressed to Martin L. Lenhardt, AuD, PhD.